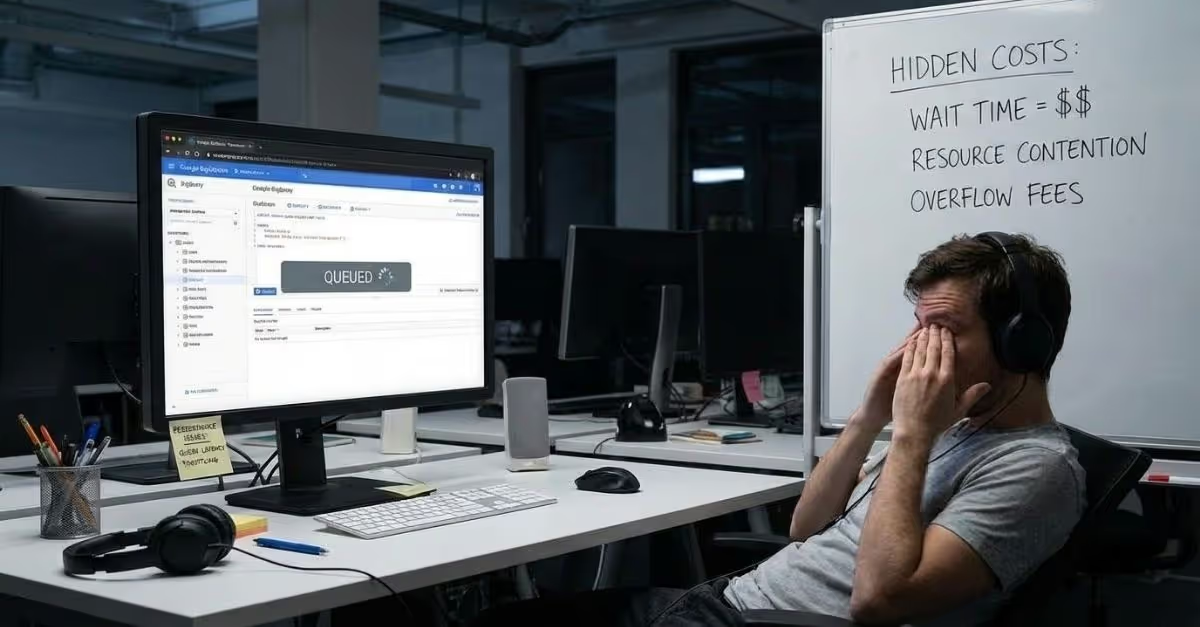

Google BigQuery’s serverless design, elastic scalability, and pay-as-you-go pricing make it one of the most widely adopted cloud data warehouses. But as data volumes and user concurrency increase, many organizations encounter a costly and often misunderstood challenge: BigQuery queuing.

Queries that should complete in seconds suddenly take minutes (or sometimes even hours), and cloud costs rise without delivering proportional business value. Common symptoms include:

- Dashboard lag

- Missed pipeline SLAs

For many teams, queuing becomes the silent performance killer hiding inside their BigQuery environment.

What Is Google BigQuery Queuing?

BigQuery queuing occurs when queries are forced to wait for compute resources (known as slots) to become available. Each query requires a certain number of slots depending on its complexity, data volume, joins, shuffles, and aggregations.


When total slot demand exceeds available capacity, BigQuery places queries in a queue. This condition is commonly referred to as slot contention.
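To make the mechanics concrete, here is a minimal toy simulation of slot contention. It is a sketch under simplifying assumptions, not a model of BigQuery's real scheduler: each query is assumed to need a fixed number of slots for a fixed runtime, and admission is strictly first-in, first-out. The names `Query` and `simulate_fifo` are hypothetical and exist only for this illustration.

```python
import heapq
from dataclasses import dataclass


@dataclass
class Query:
    name: str
    arrival: float  # seconds since the start of the simulation
    slots: int      # slots the toy model assumes the query needs
    runtime: float  # seconds the query runs once it is admitted


def simulate_fifo(queries, capacity):
    """Return {query name: seconds spent waiting} under strict FIFO admission.

    Assumption: a query starts only when its full slot requirement is free.
    Real BigQuery scheduling is more flexible than this.
    """
    running = []  # min-heap of (finish_time, slots_held)
    free = capacity
    clock = 0.0
    waits = {}
    for q in sorted(queries, key=lambda item: item.arrival):
        if q.slots > capacity:
            raise ValueError(f"{q.name} needs more slots than the pool has")
        clock = max(clock, q.arrival)
        # Release slots held by queries that have already finished.
        while running and running[0][0] <= clock:
            _, released = heapq.heappop(running)
            free += released
        # Not enough free slots: wait until running queries finish.
        while free < q.slots:
            finish, released = heapq.heappop(running)
            clock = max(clock, finish)
            free += released
        waits[q.name] = clock - q.arrival
        heapq.heappush(running, (clock + q.runtime, q.slots))
        free -= q.slots
    return waits


queries = [
    Query("a", arrival=0, slots=60, runtime=10),
    Query("b", arrival=1, slots=60, runtime=5),
    Query("c", arrival=2, slots=30, runtime=5),
]
print(simulate_fifo(queries, capacity=100))
print(simulate_fifo(queries, capacity=200))
```

With a 100-slot pool, query `b` waits 9 seconds and `c` waits 8 seconds behind it. With 200 slots, nothing waits. The same workload queues or does not queue depending only on how demand lines up against capacity.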

Queuing is most prevalent in:

- On-demand pricing models with high concurrency

- Flat-rate or reservation-based environments with misallocated slots

- Organizations running a mix of ad-hoc analytics, BI dashboards, and scheduled ETL jobs


[Figure: … scale slots from baselines of 700 and 300 respectively.]

Why Does Queuing Happen in Google BigQuery?

BigQuery separates storage and compute, enabling users to query petabyte-scale data without infrastructure management. Compute is provisioned via slots that are dynamically allocated or reserved.

This architecture delivers incredible flexibility, but it also introduces certain risks:

- Slot demand can spike unpredictably

- Concurrent workloads often overlap

- Poorly optimized queries consume excessive compute

When these factors collide, queuing becomes inevitable.

Common Causes of BigQuery Queuing and Slot Contention

1. Peak Concurrency Spikes

Analyst queries, scheduled transformations, and BI dashboard refreshes frequently run at the same time (especially during business hours).

2. Inefficient SQL Queries

Full table scans, unnecessary joins, excessive shuffling, and lack of partitioning or clustering dramatically increase slot consumption.

3. On-Demand Slot Limits

BigQuery on-demand projects typically cap concurrent slots (around 2,000 per project), which can be exhausted quickly in data-heavy environments.

4. Reservation Mismanagement

In flat-rate models, unused or fragmented reservations create artificial shortages even when total capacity appears sufficient.

5. Bursts in Ad-Hoc BI Usage

Unplanned queries from Looker, Tableau, or direct SQL access create unpredictable demand patterns that are hard to manage manually.
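A rough way to see how overlapping workloads add up is to estimate each job's average slot usage (slot-milliseconds divided by elapsed milliseconds) and then sweep through job start and end times to find the peak combined demand. The sketch below does this on made-up job records. The function names and sample numbers are hypothetical, and the 2,000-slot figure is simply the approximate on-demand cap mentioned above. Averages also hide spikes inside a single job, so treat the result as a lower bound.

```python
from datetime import datetime

ON_DEMAND_SLOT_CAP = 2000  # approximate per-project figure quoted above


def average_slots(total_slot_ms, start, end):
    """Average slots a job held: slot-milliseconds / elapsed milliseconds."""
    elapsed_ms = (end - start).total_seconds() * 1000
    return total_slot_ms / elapsed_ms if elapsed_ms > 0 else 0.0


def peak_slot_demand(jobs):
    """jobs: list of (start, end, total_slot_ms) tuples.

    Returns (peak_slots, time_of_peak) using a sweep over start/end events.
    """
    events = []
    for start, end, total_slot_ms in jobs:
        slots = average_slots(total_slot_ms, start, end)
        events.append((start, 1, slots))   # job begins: demand rises
        events.append((end, 0, -slots))    # job ends: demand falls
    # At equal timestamps, process ends (0) before starts (1).
    events.sort(key=lambda event: (event[0], event[1]))
    current, peak, peak_time = 0.0, 0.0, None
    for when, _, delta in events:
        current += delta
        if current > peak:
            peak, peak_time = current, when
    return peak, peak_time


# Made-up sample: a dashboard refresh, an ETL job, and an ad-hoc query.
jobs = [
    (datetime(2025, 1, 6, 9, 0, 0), datetime(2025, 1, 6, 9, 0, 30), 36_000_000),
    (datetime(2025, 1, 6, 9, 0, 10), datetime(2025, 1, 6, 9, 1, 10), 60_000_000),
    (datetime(2025, 1, 6, 9, 0, 20), datetime(2025, 1, 6, 9, 0, 40), 10_000_000),
]
peak, when = peak_slot_demand(jobs)
print(f"peak demand ~{peak:.0f} slots at {when}, cap {ON_DEMAND_SLOT_CAP}")
print("over cap" if peak > ON_DEMAND_SLOT_CAP else "within cap")
```

In this sample the three jobs average 1,200, 1,000, and 500 slots. None exceeds the cap alone, but for ten seconds they overlap and the combined demand reaches about 2,700 slots.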

The Business Impact of BigQuery Queuing

BigQuery queuing affects far more than query speed:

Slower Analytics and Dashboards

Business users lose trust when dashboards lag or fail to refresh on time.

Higher Cloud Costs

Re-submitting jobs while they are queued wastes compute resources. Duplicate executions delay teams, erode data trust, and hurt decision speed, efficiency, and overall ROI.

Lower Throughput Efficiency

Slot contention reduces overall system efficiency, wasting compute resources.

Increased Operational Burden

Engineering teams spend hours analyzing INFORMATION_SCHEMA views and Cloud Monitoring metrics.

Forced Overprovisioning

Many teams respond by purchasing more slots, often overspending instead of fixing root causes.

In severe cases, queuing leads to query timeouts, failed jobs, and broken pipelines.
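One simple way to quantify the problem is to measure how long each job sat between being created and actually starting. The sketch below assumes job records have already been exported as dictionaries with `job_id`, `creation_time`, and `start_time` fields, mirroring columns of the same names in the INFORMATION_SCHEMA.JOBS view. The sample rows, the helper names, and the 5-second "slow" threshold are all hypothetical.

```python
from datetime import datetime, timedelta
from statistics import median


def queue_delays(rows):
    """Map job_id -> seconds between creation and start.

    Jobs that have not started yet (start_time is None) are skipped.
    """
    return {
        row["job_id"]: (row["start_time"] - row["creation_time"]).total_seconds()
        for row in rows
        if row.get("start_time") is not None
    }


def summarize_queue_delays(rows, slow_threshold_s=5.0):
    """Summarize queue delay; slow_threshold_s is an arbitrary example value."""
    delays = sorted(queue_delays(rows).values())
    if not delays:
        return None
    slow = [d for d in delays if d > slow_threshold_s]
    return {
        "jobs": len(delays),
        "median_s": median(delays),
        "max_s": delays[-1],
        "share_slow": len(slow) / len(delays),
    }


# Made-up rows standing in for exported job metadata.
t0 = datetime(2025, 1, 6, 9, 0, 0)
rows = [
    {"job_id": "job_a", "creation_time": t0,
     "start_time": t0 + timedelta(seconds=0.5)},
    {"job_id": "job_b", "creation_time": t0 + timedelta(seconds=1),
     "start_time": t0 + timedelta(seconds=13)},
    {"job_id": "job_c", "creation_time": t0 + timedelta(seconds=2),
     "start_time": t0 + timedelta(seconds=47)},
    {"job_id": "job_d", "creation_time": t0 + timedelta(seconds=3),
     "start_time": None},  # still waiting
]
print(summarize_queue_delays(rows))
```

For these sample rows, three jobs have started, the median delay is 12 seconds, the worst is 45 seconds, and two of the three waited longer than the threshold. Tracking numbers like these over time shows whether queuing is an occasional blip or a daily pattern.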

Why Do Traditional Methods of Reducing BigQuery Queuing Fall Short?

Google BigQuery offers powerful built-in features to handle query queuing and slot contention, helping data teams maintain performance in a serverless data warehouse. These include:

- Query Queues (generally available since September 2023): Dynamically adjusts concurrency limits and queues excess queries to prevent immediate failures, supporting up to 1,000 interactive and 20,000 batch queries per project per region.

- Reservations and Autoscaling: Delivers dedicated baseline capacity with on-demand burst scaling for handling workload spikes.

- Manual Query Optimization: Techniques like table partitioning, clustering, and SQL refactoring to lower slot consumption per query.

These tools mark significant improvements, reducing query failures and enabling better resource allocation during peaks.
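As an illustration of the sizing decision behind reservations and autoscaling, the sketch below derives a baseline and a burst target from historical slot-usage samples using percentiles. Everything here is an assumption made for the example: the choice of the 50th and 95th percentiles, the rounding step of 50, the sample data, and the names `percentile` and `suggest_reservation`. It is one possible heuristic, not a rule from BigQuery.

```python
def percentile(samples, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    # Integer ceiling of pct * n / 100 avoids floating-point edge cases.
    rank = max(1, (pct * len(ordered) + 99) // 100)
    return ordered[rank - 1]


def round_up(value, step):
    """Round value up to the next multiple of step."""
    return -(-int(value) // step) * step


def suggest_reservation(samples, baseline_pct=50, peak_pct=95, step=50):
    """Suggest a baseline and a burst target from observed slot usage.

    baseline_pct, peak_pct, and step are illustrative defaults only.
    """
    baseline = round_up(percentile(samples, baseline_pct), step)
    peak_target = max(round_up(percentile(samples, peak_pct), step), baseline)
    return {
        "baseline_slots": baseline,
        "peak_target_slots": peak_target,
        "burst_headroom_slots": peak_target - baseline,
    }


# Made-up slot-usage samples (for example, one per hour).
usage = [120, 150, 180, 200, 210, 240, 260, 300, 650, 900]
print(suggest_reservation(usage))
```

For the sample data this suggests a 250-slot baseline with 650 slots of burst headroom up to a 900-slot target. A higher baseline percentile reduces queuing risk at the cost of paying for idle capacity, which is exactly the trade-off that makes manual sizing hard.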

Yet, as organizations scale their data operations in 2025, many find these native approaches insufficient for fully eliminating queuing issues. Here's why they often come up short in fast-paced, high-volume environments:

1. Primarily Reactive Approach: Native tools kick in after contention starts. Queries still queue during unexpected surges from ad-hoc analytics or ETL bursts, resulting in delays that frustrate users and slow business insights. Autoscaling reacts to current load but lacks true predictive capabilities, while monitoring via Cloud Monitoring or INFORMATION_SCHEMA.JOBS reveals problems only after dashboards lag or complaints roll in.

2. Heavy Reliance on Specialized Expertise: Getting the most from these features requires in-depth BigQuery knowledge. Accurately sizing reservations involves forecasting peak slot needs (overestimate and you waste budget; underestimate and queuing persists). Configuring concurrency targets or analyzing query execution plans demands familiarity with fair scheduling and stage timelines, creating dependencies on scarce data engineering talent.


3. Ongoing Maintenance Overhead: Data workloads aren't static. They evolve with new BI tools, expanding datasets, and shifting query patterns. Teams must continually monitor utilization, tweak reservations, and audit inefficient queries, pulling focus from strategic work. While autoscaling manages bursts effectively, it doesn't proactively address persistent issues like poorly optimized joins or excessive shuffling.

In summary, BigQuery's native tools provide a reliable foundation for controlling query queuing and slot contention, but their reactive nature, expertise requirements, and manual demands make them challenging to sustain at scale. For growing teams facing unpredictable costs and performance dips, this gap often drives the search for a more automated approach.

How Revefi Solves BigQuery Queuing Autonomously

Revefi is an AI-driven cloud cost optimization platform purpose-built for cloud data warehouses, including Google BigQuery, Snowflake, Databricks, and Amazon Redshift.

At the core of the platform is RADEN, an autonomous AI agent that continuously optimizes cost, performance, and reliability.

How Does Revefi’s AI Agent Eliminate BigQuery Queuing?

- Real-Time Detection: RADEN identifies slot contention and query slowdowns as they happen.

- Automated Root Cause Analysis: It determines whether queuing is driven by inefficient queries, workload spikes, reservation gaps, or ad-hoc BI usage.

- Actionable Optimization: Revefi recommends (and can automate) query prioritization, workload balancing, reservation consolidation, and SQL improvements.

- Proactive Cost Control: When queuing is not present, Revefi ensures compute resources are not overprovisioned, preventing unnecessary spend.

Additional BigQuery Optimization Benefits with Revefi

Beyond queuing, Revefi helps organizations:

- Reduce excessive data shuffling that inflates BigQuery costs

- Separate ad-hoc and scheduled workloads to prevent contention

- Identify stale tables and inefficient transformations

- Optimize underutilized reservations across teams

Customers report:

- Up to 60% reduction in data platform costs

- 10x improvement in operational efficiency

- Faster time-to-value with minimal setup

Revefi is trusted by global enterprises and recognized as a 2025 Gartner Cool Vendor.

The Future of BigQuery Optimization Is Autonomous

Apart from being a performance issue, Google BigQuery queuing is also a cost, scalability, and productivity problem. As data usage grows, relying on manual tuning or reactive tools becomes unsustainable.

AI-powered agents (like Revefi’s RADEN) represent the next generation of Google BigQuery cost optimization by being continuous, autonomous, and proactive. By eliminating queuing at its source, organizations regain performance, control costs, and future-proof their data platforms.

If slow queries and unpredictable BigQuery costs are holding your teams back, it’s time to let AI take over.