Last week, I participated in a live panel on "The Hidden Costs of Poor Data Quality in AI ” with colleagues from the Data management community and a live audience during which we discussed critical issues related to data quality for successful AI outcomes and how AI teams are starting to address them.

Why is Data Quality the Single Most Important Factor in AI Success?

In the current "AI gold rush," the industry has focused heavily on frontier models and applications, often neglecting the critical "middle tier", the $3 trillion economy of applications that connects them. This oversight is dangerous because AI models function as amplifiers; unlike traditional Business Intelligence tools where a data error might simply skew a single report, but a biased or flawed AI model propagates those errors to thousands of users instantly.

To prevent this, we must shift our focus from simple data quality to "AI-ready" data, which combines quality with governance and context. It is not necessary to have perfect data for every single scenario, but the data must be fit for the specific use case to ensure safety. Without this contextual rigor, the fundamental models we build will only serve to amplify bad data and widen the blast radius of errors.

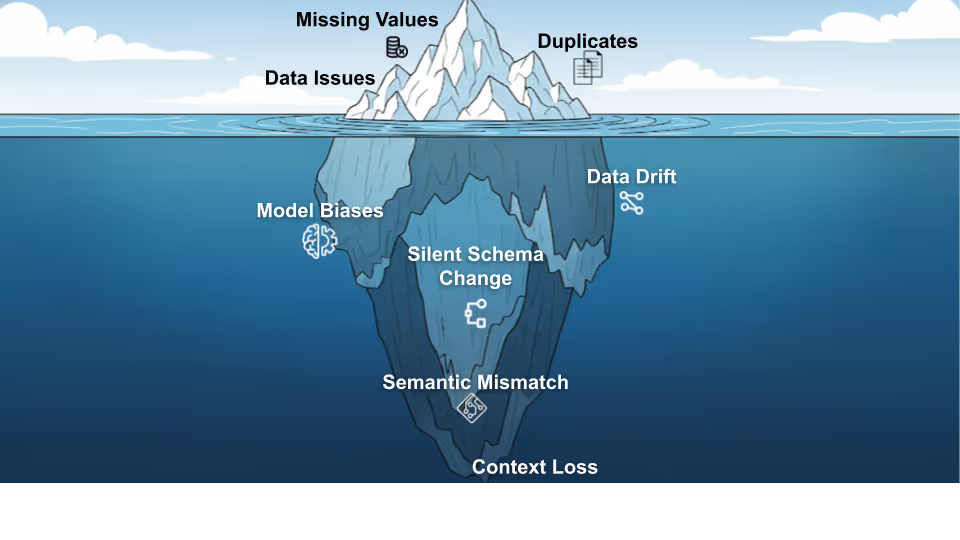

The "Unknown Unknowns": Identifying Dangerous Data Quality Issues

We are increasingly seeing the rise of "unknown unknowns," or silent data failures, where pipelines appear to run successfully and dashboards remain green, yet the underlying data is corrupt or fundamentally flawed. This issue is exacerbated in regulated industries where legacy data is often dated and lacks the timeliness required for modern, dynamic AI applications.

These silent failures are particularly insidious because they do not trigger standard alerts, allowing issues like data bias and staleness to persist unnoticed until they cause downstream damage. While we have become good at managing "known unknowns" with traditional pillars of quality, the real threat to AI performance lies in these undetected failures that traditional monitoring tools often miss.

Resolving these requires a modern approach that uses AI to autonomously monitor AI, learning normal data patterns to proactively flag "unknown unknowns" before they corrupt a model.

How do errors and biases in training data impact models?

AI has a compounding effect on data quality, as errors and biases in training data are not just replicated but worsened as they are processed by the model. To combat this, we must move toward automating the understanding of data relevance, freshness, and lineage, ensuring that we proactively identify whether we have the right data for the right use case before it ever reaches the inference layer.

Addressing bias effectively also requires breaking down organizational silos and adopting a collaborative approach, such as establishing a centralized AI Council. We cannot rely on isolated technical teams to solve these systemic issues; instead, we need organization-wide visibility and cooperation to ensure that issues around fairness and bias are addressed holistically across the enterprise.

Escaping the "Franken-stack": Best Practices for Continuous Data Quality

We are witnessing a generational shift in technology that allows us to move beyond the legacy data quality practices of the past 30 years. By adopting modern DevOps, DataOps, MLOps and LLMOps together, organizations can achieve a "yin and yang" balance where they not only monitor data quality but also optimize the deployment and underlying infrastructure and costs simultaneously.

Organizations often fall into the "Tool Trap," creating a "Franken-stack" by buying separate tools for lineage, validation, pipeline monitoring, and FinOps. This turns data engineers into "data plumbers" and crushes them with the "high interest credit card of technical debt" from integrating these disparate systems. The "best tool" is not a tool; it's a single, "self-driving" platform that unifies FinOps, DataOps, and Observability in one "seamless integration".

Enforcing Standards: The Solvable Tension Between Culture and Tooling

While measuring data quality against classic pillars is relatively straightforward, ensuring consistent enforcement across diverse departments is a significant challenge. Often, different teams have different definitions for the same metrics, leading to a disconnect that can only be resolved through a combination of strong cultural collaboration and technological consistency.

Organizations face a 'culture vs. tooling' problem. Measuring quality dimensions like Completeness or Uniqueness is easy, but enforcement is where things fall apart. Legacy approaches fail: a central "Master Data team" creates a bottleneck, while making quality "everyone's job" leads to a diffusion of responsibility. The only way to enforce standards is to "automate them and make them visible" via a unified platform that acts as the "enforcer" and "single source of truth". By automating monitoring and remediation, we can bridge the gaps between teams, ensuring that standards are adopted universally and that high-quality, governed data becomes the default across the entire organization.

The Role of Data Observability

Data observability is the proactive, AI-driven evolution of traditional data monitoring. Legacy monitoring is "static, rule-based, and reactive," and crucially, it only monitors pipeline performance (is it "on" or "off"?) while missing the "silent data failures" in the data itself. Data Observability augments and extends Data Quality practices.

Data Observability fills this gap by providing an "end-to-end view of data's health," learning its "typical data behavior patterns" to catch "unforeseen exceptions". Effective implementation involves instrumenting pipelines, monitoring for drift, using anomaly detectors instead of static thresholds, and maintaining lineage for traceability.1 This approach reduces silent failures and builds the trust required to scale AI.

Adopting Modern Governance: Balancing Accessibility, Accuracy, and Compliance

To balance 'Guardrails vs. Innovation,' a governance framework cannot be a "3-ring binder of rules"; it must be a "living, automated system". As the lines between data governance and AI governance continue to blur, organizations should adopt neutral, comprehensive standards like the NIST Risk Management Framework rather than relying on vendor-specific definitions. But frameworks like the NIST AI RMF provide a great structure (Govern, Map, Measure, Manage), but they fail because the 'Measure' and 'Manage' functions are continuous, 24/7 tasks that "cannot be done manually".

A potential solution is an 'active metadata' engine that automates "policy enforcement, lineage tracking, and quality monitoring" which operationalizes the framework, turning governance from a cost center that says "no" into an accelerator that enables teams to move fast, safely. In this new AI era, governance must be at the forefront of our strategy to ensure that the data feeding our models is trustworthy, accurately measured, and managed with a clear understanding of its lineage and risks.

Man vs. Machine: The Future of Automation and Human-in-the-Loop Oversight

The "automation vs. human" debate for enduring data quality is a "false dichotomy"; the future is a partnership. Automation is not optional; it is a necessity for scale, handling the 99% of "routine cases" that are "impractical" for humans.

This "irreplaceable" human oversight provides the judgment for the 1% of ambiguity, handling "nuanced decisions, such as detecting bias or making ethical judgments" in "high-stakes situations". The goal of automation is not to replace humans but to elevate them, freeing up resources from manual intervention for strategic initiatives.

A Final Recommendation

Treat data-quality as your strategic foundation for AI. Invest first in measuring, monitoring and governing your data, spend and pipelines, then build your AI models on that trusted base to scale reliably and realize ROI. This reframes data quality as a proactive, strategic asset, not a "reactive, IT cleanup task".

You can view the entire webinar here: The Hidden Costs of Poor Data Quality in AI