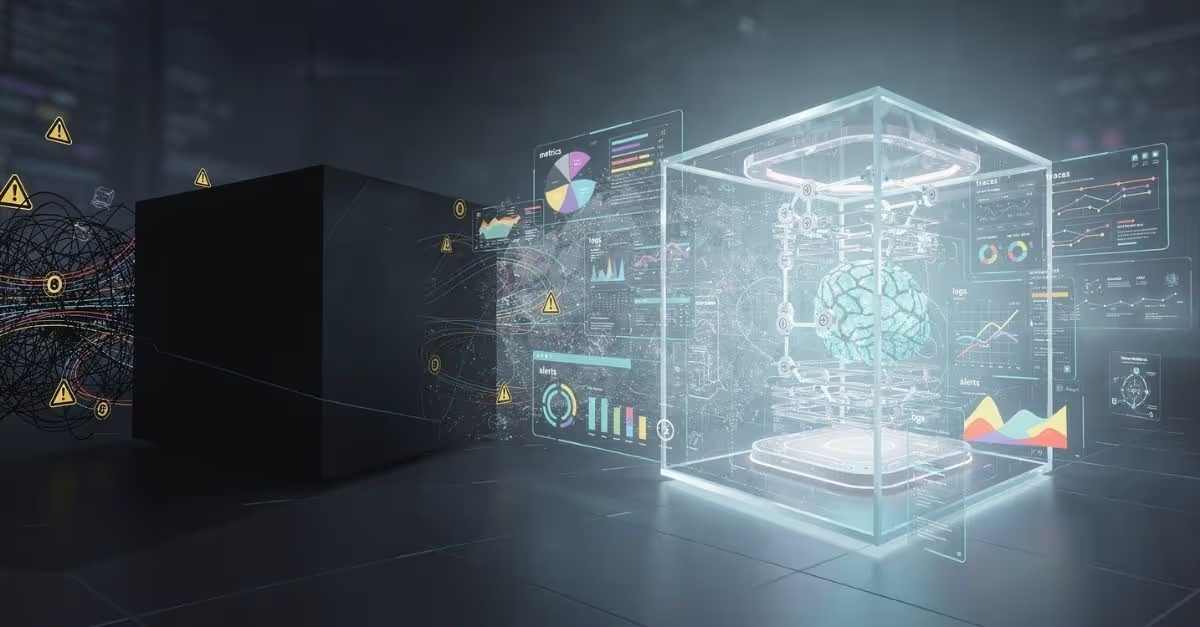

As AI moves from prototype to production, the challenge shifts from algorithms, LLMs to operations. Last week, I participated in a live panel on "From Black Box to Glass Box," where we explored why AI-ready data, Cost Observability and Data Observability are the missing links for scalable, trustworthy AI. Here are my key takeaways from that discussion.

The Critical Overlap: Data Observability vs. AI Observability

Understanding how data observability and AI observability differ (and how they work together) is essential. Data observability looks upstream, monitoring the health of the data ecosystem, including pipeline freshness, schema changes, and data lineage. AI observability operates downstream at the model layer, focusing on issues such as model drift, explainability, and bias detection.

Many AI model failures originate from upstream data problems. For example, a model’s performance can degrade simply because a critical join key was removed during an ETL process. A unified approach, like Revefi, brings these layers together, creating a transparent system where every model output can be traced back through its complete data lineage.

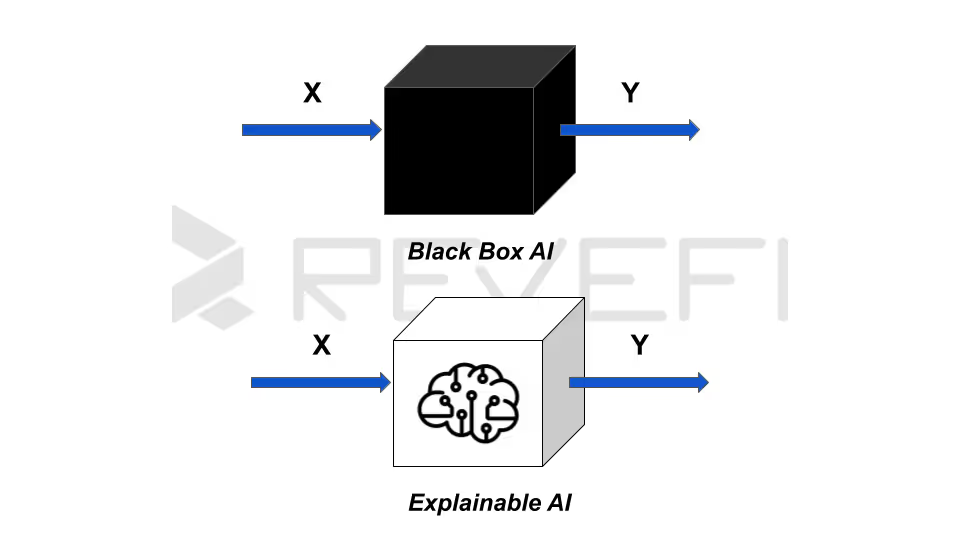

Why the "Black Box" approach is a Business Liability

AI scaling is fundamentally an operational challenge because AI systems are dynamic; they drift, degrade, or fail silently over time. Treating AI as a "black box" creates multi-layered risks: it reduces user trust, prevents timely debugging, and accelerates ethical harm by concealing biases.

When AI is merely a prototype, some opacity is tolerable, but when it is underwriting loans or routing supply chains, opacity becomes a liability. You cannot fix what you cannot see. As AI scales, these blind spots get expensive fast, hiding errors and operational regressions that demand continuous observability.

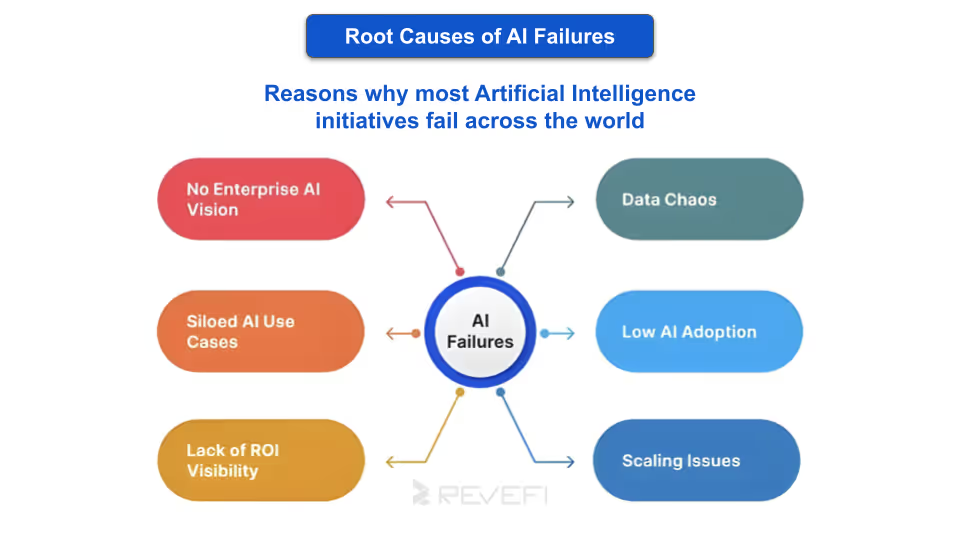

Common Failure Points in AI Systems

AI systems tend to fail in specific patterns. Data distributions shift silently due to seasonality or user behavior, while features degrade because the world changes faster than the engineering pipeline. Training-serving skew and pipeline outages can also create stale feature vectors that compromise predictions.

Observability acts as the operational root cause analyst, using telemetry to pinpoint the exact failure location. Platforms like Revefi are designed to immediately detect these upstream data failures, which can cost millions if left unchecked.

Designing Observability from Day One

A common mistake is treating observability as an afterthought and adding it only after systems are deployed, when gaps in visibility are already baked in. A more effective approach is to make observability a core foundation, with automatic pipeline instrumentation and lineage that links every prediction back to its origin.

Observability should be viewed as essential infrastructure. Establishing system baselines defines normal behavior, making anomalies easier to detect, while feature stores must continuously record transformations. Revefi automates the rollout of millions of monitors, delivering built-in, zero-touch observability that scales by default.

Mastering Drift: Data, Model, and Concept

Drift matters because AI systems live in the real world and the real world is dynamic. AI models are trained on a snapshot of reality: historical data, past behavior, yesterday’s patterns. Over time, those patterns change. Customer behavior shifts, markets move, seasonality kicks in, data sources evolve, and pipelines break in subtle ways. When the data feeding a model no longer matches what it was trained on, the model’s assumptions quietly become wrong. That’s drift.

Detecting drift is relatively easy; the trick is knowing which drift actually matters. Statistical drift can produce false positives, while concept drift happens slowly and subtly. Often, model drift only shows up after accuracy has already dropped.

Best practice requires moving beyond simple averages to rigorous statistical methods. The most effective systems correlate statistical drift, model performance drift, and concept drift to provide a true picture of system health.

Transparency, Accountability, and Explainable AI

Observability provides the essential data infrastructure for Explainable AI by capturing a comprehensive, non-repudiable audit trail. While explainability tells you why a prediction was made, observability tells you whether the conditions around that prediction were valid.

You cannot achieve real accountability without both. This infrastructure enables us to continuously monitor fairness metrics and automatically divert low-confidence decisions to human review, ensuring alignment with ethical objectives.

Navigating the Tooling Landscape

While open-source tools offer good starter building blocks for specific tasks, enterprise AI requires a unified approach. Scaling in a multi-cloud environment demands unified ingestion of logs, automated anomaly detection, and compliance-ready audit logs.

A key differentiator for enterprise platforms like Revefi is FinOps and Cost Optimization, which links technical metrics directly to financial metrics to automate cloud cost optimization. This depth turns operational visibility into guaranteed ROI.

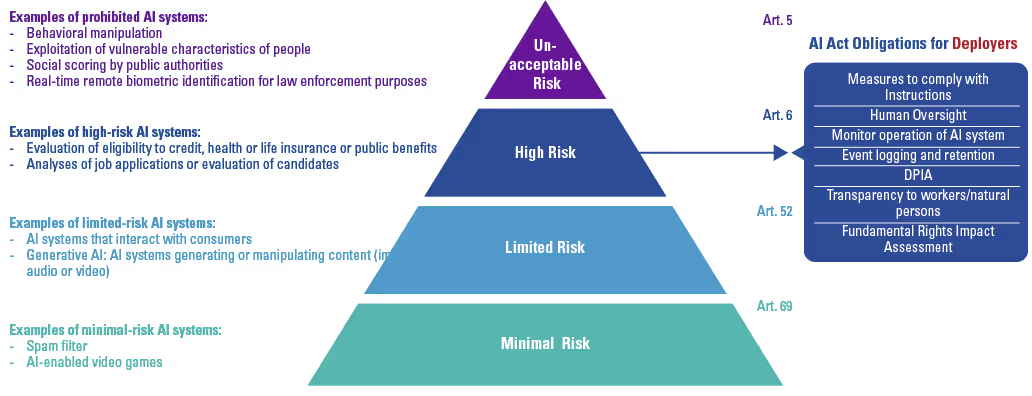

Regulatory Alignment (GDPR & EU AI Act)

Observability is the technical mechanism for demonstrating compliance, transforming static policies into continuous, executable controls. For example, the EU AI Act mandates continuous logging for traceability and rigorous assessment of data quality and bias for high-risk systems.

Observability provides the verifiable data streams necessary to satisfy these legal requirements. Ultimately, it is not just good engineering practice; it is essential risk mitigation.

The Future: Unified and Autonomous

The next decade of AI observability will see the unification of the entire AI stack from data, features, models, and agents and acted up through a single lens. We are moving toward autonomous detection and remediation, where systems identify root causes and propose (and make) fixes automatically.

This includes observability for LLMs and agents, monitoring reasoning chains and context windows, not just predictions. Revefi is building toward a future where we merge signals into a context-aware layer that understands intent and causality.

Key Recommendation

Design in data, model, security, and cost observability to build trustworthy AI products that solve use cases with positive ROI.

You can view the entire webinar here: From Black Box to Glass Box: Observability for Scalable AI Systems