I recently had the privilege of hosting a live session with John Holdzkom and Dan Greenberg from Verisk to discuss their organization's journey toward unifying FinOps and Data Observability.

What unfolded was a masterclass in how large enterprises can tackle the dual challenges of data platform cost optimization and operational efficiency without sacrificing performance or user satisfaction. Verisk’s experience offers a practical blueprint for how data teams can unify FinOps and observability at massive scale.

The Challenge of Scale and Cost

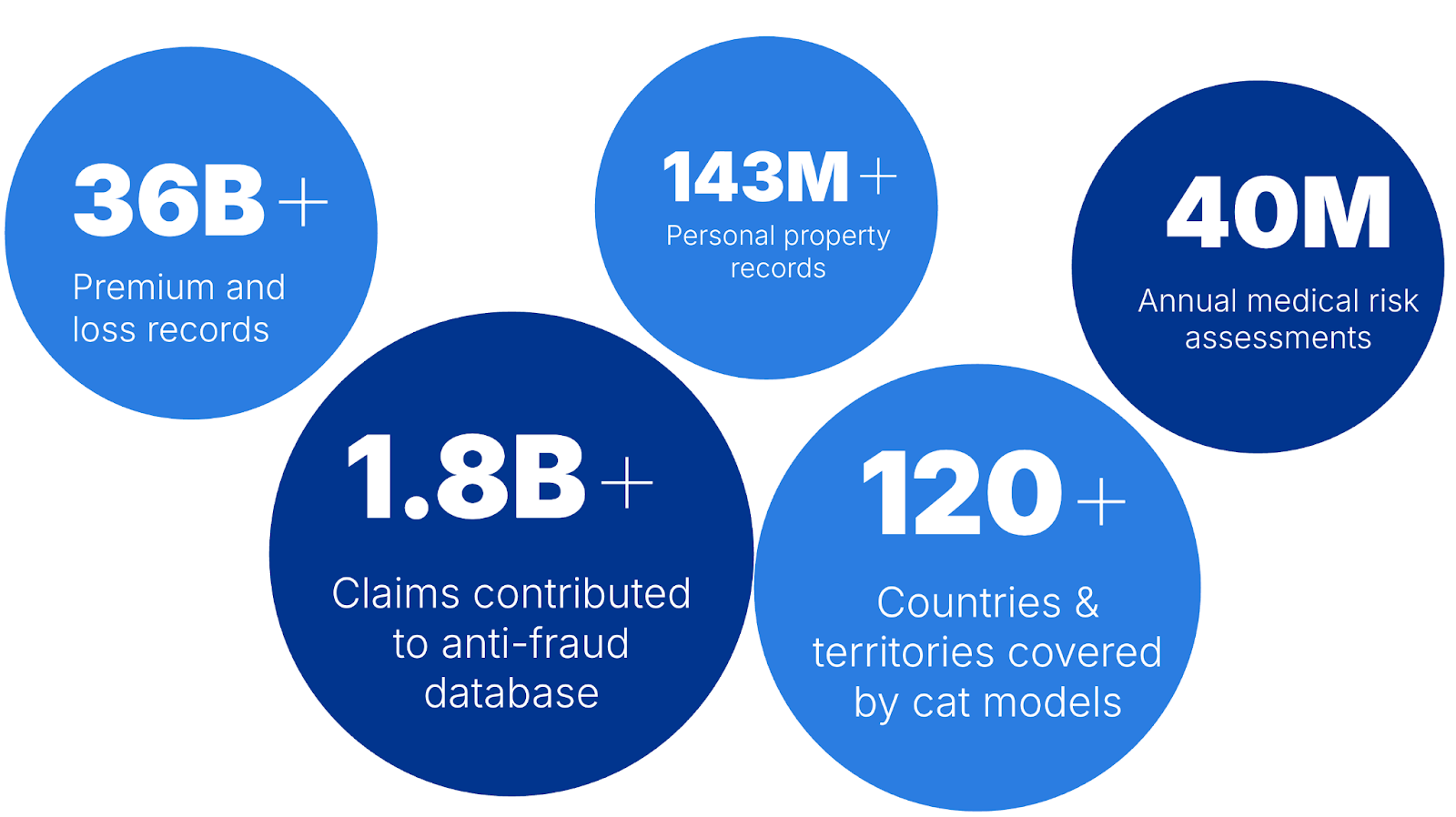

Verisk is a global leader in the insurance industry and isn't a typical tech company. They're deeply embedded in the workflows of insurance carriers worldwide, processing billions of rows of data across premium records, property data, claims, and fraud databases. With offices around the globe, they support critical business functions including underwriting, claims processing, catastrophe risk assessment, regulatory compliance, and anti-fraud solutions. To appreciate the complexity of their operation, you have to look at the numbers.

John Holdzkom, AVP of Data Technologies at Verisk, set the stage by describing their data landscape. For five to six years, they've been running their analytics workloads on Snowflake, and like many organizations experiencing data growth, they have seen their utilization, and consequently their costs, keep increasing.

For a company of this magnitude, every compute cycle in Snowflake has a significant financial footprint. When Verisk moved to the cloud, they gained unprecedented flexibility, but they also faced the "visibility paradox." As utilization grew, high-level reports from the "Account Admin" view didn't provide enough granular detail for individual developers to see the financial impact of their SQL queries.

What made Verisk's situation particularly interesting was the sensitivity and integrity requirements around their data. Any tool touching their systems would need to pass rigorous security reviews. This is where the concept of "zero touch" became critical in their evaluation process.

The Zero-Touch Onboarding

When Verisk began exploring optimization solutions about two years ago, they needed something that could deliver value without requiring extensive reviews or implementation overhead. John described their onboarding experience with Revefi: a single 30-minute Zoom call where their DBAs created the necessary roles with correct metadata-only permissions, and they were operational.

No data touch. No extended implementation. No security headaches.

This ease of deployment was transformative for an organization where anything touching sensitive data typically requires extensive vetting. The metadata-only approach meant Revefi could access account usage information and operate warehouses without ever touching the actual data flowing through their systems.

Identifying the "Rube Goldberg" Query and Hidden Waste

During our session, Dan Greenberg, the Snowflake Data Platform Owner at Verisk, shared several examples of the inefficiencies that occur when data teams lack real-time feedback. He noted that his success in saving money has occasionally put him on "Snowflake’s naughty list." From my perspective, Dan is a Verisk hero because he has helped free up budget for new innovation, generative AI, and data use cases.

One specific inefficiency Dan identified was what he calls "Rube Goldberg queries." These are queries that are functionally correct but architecturally complex and wasteful. For instance, he discovered a query on a multi-billion-row table that used an EXCEPT set operation to filter data. By simply replacing it with a standard WHERE clause, he cut the execution time from over an hour to just a few minutes, drastically reducing credit consumption.
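To make that kind of rewrite concrete, here is a minimal sketch in Python using an in-memory SQLite database rather than Snowflake. The actual Verisk query was not shown in the session, so the `claims` table, its columns, and the `void` status are hypothetical stand-ins. The sketch only illustrates that the two query shapes can return the same rows; it says nothing about their relative speed on a real warehouse.

```python
import sqlite3

# Hypothetical stand-in table; the real query and schema were not shared.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE claims (claim_id INTEGER PRIMARY KEY, status TEXT NOT NULL)"
)
conn.executemany(
    "INSERT INTO claims VALUES (?, ?)",
    [(1, "open"), (2, "closed"), (3, "open"), (4, "void")],
)

# "Rube Goldberg" form: build the full set, build the unwanted set,
# then subtract one from the other.
except_sql = """
    SELECT claim_id, status FROM claims
    EXCEPT
    SELECT claim_id, status FROM claims WHERE status = 'void'
"""

# Direct form: filter in a single pass over the table.
where_sql = "SELECT claim_id, status FROM claims WHERE status <> 'void'"

except_rows = sorted(conn.execute(except_sql).fetchall())
where_rows = sorted(conn.execute(where_sql).fetchall())

# Both forms return the same rows for this data.
assert except_rows == where_rows
print(where_rows)
```

One caveat on the design choice: the two forms are only interchangeable when the selected rows are unique and the filter column has no NULLs. EXCEPT removes duplicate rows, while a `<>` comparison silently drops rows where the column is NULL, so a rewrite like this should be checked against the data before it is swapped in.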

Other common pitfalls we discussed included:

- Warehouse Sizing Mismatches: Manually scaling a warehouse to "Large" for a one-time issue and forgetting to scale it back, leading to thousands of dollars in monthly waste.

- The Stored Procedure "Black Box": Not being able to see which specific "child query" inside a complex procedure is causing a bottleneck.
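The warehouse sizing pitfall is easy to underestimate, so here is a rough back-of-the-envelope sketch. It relies on Snowflake's standard pattern of credits per hour doubling with each warehouse size. The price per credit, the active hours, and the assumption that the workload runs just as long on the larger warehouse are all hypothetical values chosen for illustration, not Verisk's figures.

```python
# Standard Snowflake warehouses double in credits per hour with each size step.
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8, "XLARGE": 16}


def monthly_cost(size: str, active_hours: float, price_per_credit: float) -> float:
    """Estimated monthly spend for a warehouse running for active_hours."""
    return CREDITS_PER_HOUR[size] * active_hours * price_per_credit


# Assumptions for illustration only: 10 active hours a day for 30 days,
# and a hypothetical price of $3.00 per credit.
hours = 10 * 30
price = 3.00

needed = monthly_cost("SMALL", hours, price)      # the size the workload needs
forgotten = monthly_cost("LARGE", hours, price)   # the size it was left at
waste = forgotten - needed

print(f"needed=${needed:,.0f} forgotten=${forgotten:,.0f} waste=${waste:,.0f}")
```

Under these assumptions, a single warehouse left two sizes too large costs four times what it should, which is how one forgotten manual change can add up to thousands of dollars a month.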

The Data Observability and Quality Dimension

While cost optimization was Verisk's initial focus, John highlighted an unexpected benefit: automated data observability. Without writing custom monitors, they now have thousands of monitors tracking hundreds of thousands of tables containing trillions of rows, on a platform that processes millions of queries monthly.

These monitors check for data freshness, completeness, and anomalies, with alerts delivered through their existing communication channels, Microsoft Teams and email. This monitoring infrastructure, which would have required significant engineering effort to build and maintain, came as an integrated capability.
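For readers less familiar with these terms, the sketch below shows what a freshness check and a completeness check boil down to in their simplest form. It is an illustration only, not how Revefi implements its monitors; the function names, the 24-hour threshold, and the sample values are all hypothetical.

```python
from datetime import datetime, timedelta, timezone


def is_stale(last_loaded_at: datetime, max_age: timedelta, now: datetime) -> bool:
    """Freshness: True when the table has not been updated within max_age."""
    return now - last_loaded_at > max_age


def null_rate(values: list) -> float:
    """Completeness: fraction of values that are missing (None)."""
    if not values:
        return 0.0
    return sum(v is None for v in values) / len(values)


# Hypothetical timestamps: the table was last loaded 30 hours before "now".
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
last_load = datetime(2024, 1, 1, 6, 0, tzinfo=timezone.utc)

stale = is_stale(last_load, max_age=timedelta(hours=24), now=now)
rate = null_rate(["A1", None, "C3", "D4"])

print(f"stale={stale} null_rate={rate:.2f}")
```

Writing one such check is trivial. Choosing sensible thresholds and keeping the checks maintained across hundreds of thousands of tables is the part that takes real engineering effort, which is why John called out the automated deployment as a benefit.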

Strategic Wins in FinOps and Performance

By utilizing Revefi, Verisk was able to implement several strategic features that manual management simply couldn't scale:

- Autonomous Warehouse Management: The system continuously analyzes historical usage to "right-size" resources. Verisk has realized significant raw cost savings on managed warehouses.

- Threshold Management: Verisk established minimum sizes for critical workloads to prevent expensive "timeouts," the worst kind of waste, where you pay for compute that never returns a result.

- Schedule Overrides: For planned heavy-load periods, like weekend full loads, the team can proactively scale warehouses for a specific window and then return to autonomous management.

- Stored Procedure Visibility: For the first time, Dan and his team can see every step of a stored procedure, identifying precisely where time and money are being spent.

Redefining ROI: Saving Time and Money

The financial results have been compelling. Verisk’s investment in unified FinOps and observability has delivered an ROI of more than 100% on their licensing costs, essentially paying for itself through identified savings.

But the value goes beyond the monthly bill. In the insurance industry, data quality is a risk management priority. Verisk used our AI agent to auto-deploy thousands of monitors across trillions of rows and hundreds of thousands of tables. This resulted in a 99% reduction in manual monitoring effort.

The Future of the Agentic Data Office

What began two years ago as a cost optimization initiative at Verisk has evolved into a comprehensive approach to data platform management. They're saving significantly on warehouse costs while increasing utilization. They have automated monitoring across trillions of rows of data. Their teams can investigate and resolve issues without waiting for central IT intervention.

Perhaps most importantly, they've achieved something rare in enterprise IT: a solution that paid for itself through cost savings while simultaneously improving operational efficiency and user satisfaction.

As we look toward the future, the role of the data team is shifting. It’s no longer just about building pipelines; it’s about architecting reusable, efficient platforms with positive ROI. Verisk is a prime example of an organization leading this change. By automating the mundane tasks of performance tuning and spend tracking, they’ve redirected their engineering talent toward higher-value initiatives like Generative AI.

In the modern data landscape, cost, performance, and quality are inextricably linked. You cannot optimize one in a silo. Unified FinOps and observability driven by autonomous, agentic AI is a way to manage the complexity of a global enterprise.

I want to thank John and Dan for sharing their journey. At Revefi, we’re proud to be part of it as they continue to innovate for the global insurance ecosystem.