Cloud data warehouses like Snowflake, Databricks, Google BigQuery, and Amazon Redshift are now the backbone of AI initiatives, data analytics, and data pipelines, enabling enterprises to store, process, and query petabytes of data at scale.

However, this flexibility and scalability come with a significant challenge: unpredictable and rapidly growing costs. The shift to consumption-based pricing models means organizations often pay for more compute, storage, and data transfer than they realize. Without fine-grained visibility and real-time controls, costs can quickly spiral out of control.

This inevitably leads to budget overruns, inefficient resource usage, and delayed financial reporting.

Why Do Cloud Data Warehouse Costs Escalate?

To understand the issues, it’s important to analyze the cost drivers for platforms like Snowflake, BigQuery, and Redshift:

1. Compute Consumption

- Warehouse Scaling in Snowflake: Snowflake’s multi-cluster virtual warehouses can auto-scale to handle concurrent workloads. Without limits on warehouse size and cluster count, warehouses end up oversized and underutilized.

- Slot Allocation in BigQuery: Misconfigured reservations can lead to underused slots or burst billing at on-demand rates.

- Concurrency Scaling in Redshift: Sudden spikes trigger additional clusters billed per second.

2. Storage Growth

- Persistent tables, intermediate datasets, and historical partitions accumulate over time.

- Retention policies that are never reviewed keep unused data around and inflate storage bills.

3. Query Inefficiencies

- Poorly optimized SQL (e.g., cartesian joins, non-pruned partitions) causes excessive scan costs.

- Duplicate or redundant queries from multiple teams increase compute load.

4. Data Movement

- Cross-region transfers or frequent extraction into downstream tools add hidden egress charges.

5. Idle Resources

- Warehouses left running during off-peak hours and test environments that are not decommissioned promptly keep consuming credits while doing no useful work.

Traditional monitoring tools provide point-in-time visibility, but lack the predictive and prescriptive intelligence needed to fix these inefficiencies in real time. This is where Revefi’s AI Agent provides a fundamental advantage.
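To put a number on the idle-resource driver, here is a rough back-of-the-envelope sketch. It assumes Snowflake's standard credit rates (1 credit per hour for X-Small, doubling with each size) and a hypothetical price of $3.00 per credit; the actual price depends on edition, region, and contract. The function name `idle_cost` and its parameters are illustrative only.

```python
# Credits per hour for standard Snowflake warehouse sizes (doubles per size).
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8, "XLARGE": 16}


def idle_cost(size: str, idle_hours_per_day: float, days: int = 30,
              price_per_credit: float = 3.0) -> float:
    """Estimate dollars spent on a warehouse that runs while doing no work.

    price_per_credit is an assumed placeholder, not a published rate.
    """
    credits = CREDITS_PER_HOUR[size] * idle_hours_per_day * days
    return credits * price_per_credit


# A Medium warehouse idling 10 hours a day for 30 days:
# 4 credits/h * 10 h * 30 days * $3.00 = $3600.00
print(idle_cost("MEDIUM", idle_hours_per_day=10))
```

Even a mid-sized warehouse that is left running overnight can add thousands of dollars per month under these assumptions, which is why idle time is usually the first thing worth measuring.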

Why is AI Critical for Cost Optimization?

Manual cost tuning in cloud warehouses is insufficient due to scale and volatility:

- Thousands of queries per hour make manual review impractical.

- Dynamic scaling policies mean cost behavior can shift hourly.

- Team-level usage patterns are hard to correlate without automated anomaly detection.

Properly implemented AI systems provide a closed-loop feedback system to:

- Observe: Continuously monitor metrics in real time.

- Predict: Forecast cost and detect anomalies proactively.

- Act: Automatically apply optimization actions with minimal human input.

- Learn: Improve future decisions based on outcomes of prior actions.
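The four stages above can be sketched as a toy feedback loop. This is a deliberately minimal illustration, not Revefi's implementation: the class `CostLoop`, the exponentially weighted forecast, and the `alpha` and `tolerance` values are all assumptions chosen to keep the example short.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CostLoop:
    alpha: float = 0.3       # smoothing factor for the forecast (assumed value)
    tolerance: float = 1.5   # alert when spend > tolerance * forecast (assumed value)
    forecast: Optional[float] = None

    def step(self, hourly_spend: float) -> str:
        # Observe: take in the latest spend reading.
        # Predict: compare it with the forecast made from earlier readings.
        expected = self.forecast
        # Act: raise an alert when spend exceeds the tolerated band.
        action = "none"
        if expected is not None and hourly_spend > self.tolerance * expected:
            action = "alert"
        # Update the exponentially weighted forecast for the next step.
        if expected is None:
            self.forecast = hourly_spend
        else:
            self.forecast = self.alpha * hourly_spend + (1 - self.alpha) * expected
        return action

    def learn(self, false_alarm: bool) -> None:
        # Learn: loosen the band after a false alarm, tighten it otherwise.
        self.tolerance *= 1.1 if false_alarm else 0.95


loop = CostLoop()
actions = [loop.step(spend) for spend in [10, 11, 10, 30]]
print(actions)  # ['none', 'none', 'none', 'alert']
```

A production system would replace the single smoothed forecast with richer models and the alert with concrete optimization actions, but the control flow stays the same: each outcome feeds back into the thresholds used for the next decision.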

This adaptive approach ensures sustained savings, even as workloads and data volumes evolve. Revefi’s AI Agent for Data Spend Optimization addresses this challenge by combining AI, machine learning, real-time monitoring, and automated optimizations to minimize spend while maintaining or improving query performance.

Revefi’s AI Architecture: An Overview

Revefi’s cost optimization framework is built around three core capabilities: observability, prediction, and automation. Here’s how each layer works technically.

1. Observability: Granular, Real-Time Telemetry

Revefi integrates directly with cloud warehouses using metadata, including:

- Query execution statistics: CPU time, I/O, scan bytes, execution stages.

- Warehouse utilization: credit consumption, concurrency, auto-suspend/resume events.

- Storage metrics: per-table growth rates, partition usage, time-travel data retention.

- Cost breakdown: credit burn per workload, team, and query pattern.

This data is streamed into a real-time analytics engine capable of handling millions of events per day. Unlike static dashboards, Revefi runs thousands of time-series models across cost, performance, and quality dimensions, enabling instant anomaly detection and historical trend analysis.
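As an illustration of what a cost breakdown by team and query pattern involves, the sketch below groups query records by a normalized SQL "fingerprint" so that queries differing only in literal values count as one pattern. The record fields (`team`, `sql`, `credits`) and the helper names are hypothetical; real warehouse metadata views expose different column names, and this is not a description of Revefi's pipeline.

```python
import re
from collections import defaultdict


def fingerprint(sql: str) -> str:
    """Normalize a query so that only its shape remains."""
    s = re.sub(r"'[^']*'", "?", sql)          # replace string literals
    s = re.sub(r"\b\d+(\.\d+)?\b", "?", s)    # replace standalone numeric literals
    return re.sub(r"\s+", " ", s).strip().lower()


def credits_by(records, key_fn):
    """Sum credits per group, where key_fn maps a record to its group."""
    totals = defaultdict(float)
    for record in records:
        totals[key_fn(record)] += record["credits"]
    return dict(totals)


# Hypothetical query-history records.
records = [
    {"team": "analytics", "sql": "SELECT * FROM orders WHERE id = 42", "credits": 0.5},
    {"team": "ml", "sql": "select *  from orders where id = 7", "credits": 0.25},
    {"team": "ml", "sql": "SELECT count(*) FROM events WHERE day = '2024-01-01'", "credits": 2.0},
]

by_team = credits_by(records, lambda r: r["team"])
by_pattern = credits_by(records, lambda r: fingerprint(r["sql"]))
print(by_team)
print(by_pattern)
```

Grouping by fingerprint also surfaces the duplicate queries mentioned earlier: the first two records come from different teams but collapse into the same pattern.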

2. Prediction: AI for Cost Forecasting

At the core of Revefi is its AI cost prediction engine, which uses advanced techniques such as:

- Multivariate time series forecasting for workload patterns.

- Unsupervised anomaly detection for sudden spikes in spend.

- Reinforcement learning models that simulate optimization actions and learn policies in real time.

These models don’t just report anomalies. They predict future spend based on historical patterns, query growth rates, and expected data ingestion. This allows organizations to proactively budget and allocate resources rather than react after costs are incurred.
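To show the difference between reporting an anomaly and forecasting spend, here is a small sketch that uses much simpler stand-ins for the techniques listed above: a least-squares linear trend in place of multivariate forecasting, and a robust z-score (median and median absolute deviation) in place of a learned anomaly detector. The function names and the 3.5 threshold are assumptions for illustration.

```python
from statistics import mean, median


def forecast_next(spend):
    """Extrapolate a least-squares linear trend one step ahead."""
    n = len(spend)
    xs = list(range(n))
    x_bar, y_bar = mean(xs), mean(spend)
    slope = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, spend))
             / sum((x - x_bar) ** 2 for x in xs))
    return y_bar + slope * (n - x_bar)


def spikes(spend, threshold=3.5):
    """Return indexes whose robust z-score exceeds the threshold."""
    med = median(spend)
    mad = median([abs(v - med) for v in spend])
    if mad == 0:
        return []  # no spread, so nothing can be scored
    return [i for i, v in enumerate(spend)
            if 0.6745 * (v - med) / mad > threshold]


# Daily spend growing by 10 per day: the trend predicts 140 for the next day.
print(forecast_next([100, 110, 120, 130]))

# A flat series with one jump: only the last day is flagged.
print(spikes([100, 102, 98, 101, 99, 250]))  # [5]
```

The forecast supports budgeting before costs are incurred, while the spike detector only fires after the fact; a predictive system needs both.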

3. Automation: AI for Cost Optimization Actions

Our AI Agent for Data Spend Optimization cuts cloud data costs and boosts performance across platforms like Snowflake and BigQuery.

Its automation layer translates insights into real-time actions, such as:

- Automatically resizing warehouses based on query load

- Scaling clusters efficiently

- Pausing idle resources to prevent waste

- Suggesting optimizations like partitioning or caching

- Flagging redundant tasks

Storage is managed through smart retention policies and automatic archival to low-cost tiers. Real-time predictive alerts warn about unusual spend and support “what-if” simulations for future scaling needs.
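A rule-based sketch of the first three actions (resize, scale, pause) might look like the following. The thresholds, the `recommend_sql` function, and its inputs are hypothetical and far simpler than a learned policy; only the generated `ALTER WAREHOUSE` statements are standard Snowflake SQL. The function returns a statement for review rather than executing anything.

```python
from typing import Optional

SIZES = ["XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE"]


def recommend_sql(name: str, size: str, utilization: float, queued: int,
                  idle_minutes: float, idle_limit: float = 10) -> Optional[str]:
    """Suggest a Snowflake statement for a warehouse, or None to leave it alone.

    utilization is the average load as a fraction (0.0 to 1.0); all
    thresholds below are assumed values for illustration.
    """
    # Pause warehouses that have been idle longer than the limit.
    if idle_minutes >= idle_limit:
        return f"ALTER WAREHOUSE {name} SUSPEND"
    i = SIZES.index(size)
    # Scale up when queries are queuing on a busy warehouse.
    if queued > 0 and utilization > 0.8 and i < len(SIZES) - 1:
        return f"ALTER WAREHOUSE {name} SET WAREHOUSE_SIZE = '{SIZES[i + 1]}'"
    # Scale down when the warehouse is mostly empty and nothing is waiting.
    if queued == 0 and utilization < 0.3 and i > 0:
        return f"ALTER WAREHOUSE {name} SET WAREHOUSE_SIZE = '{SIZES[i - 1]}'"
    return None


print(recommend_sql("etl_wh", "MEDIUM", utilization=0.9, queued=4, idle_minutes=0))
print(recommend_sql("dev_wh", "SMALL", utilization=0.0, queued=0, idle_minutes=25))
```

Checking the idle condition first means a forgotten test warehouse is suspended regardless of its size, which matches the priority of "pause before resize" that most cost policies follow.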

Conclusion

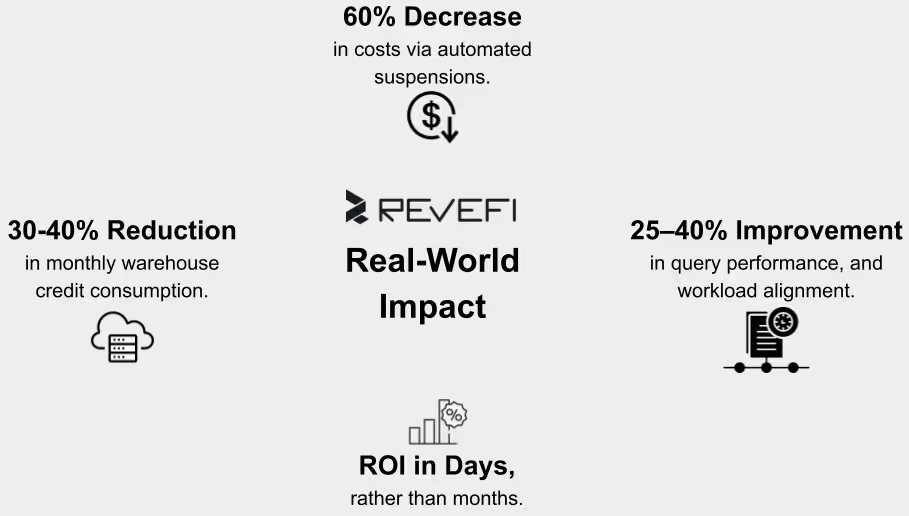

Cloud data warehousing costs are inherently variable, but they don’t have to be unpredictable. By combining AI-powered observability, predictive analytics, and automated optimizations, Revefi enables enterprises to control spend without compromising performance.

For engineering and FinOps teams managing large-scale Snowflake, BigQuery, or Redshift environments, this approach is an operational necessity for scaling analytics sustainably.

Ready to optimize your cloud data warehouse spend with AI?

Learn more about Revefi’s AI Agent for Data Spend Optimization and start reducing your Snowflake, BigQuery, and Redshift costs today.

Related Reading

To get a better picture of data cost management and warehouse optimization, check out these other related blogs: