Imagine making a million-dollar business decision, only to realize later that it was based on inaccurate data.

Poor data quality isn’t just an inconvenience; it’s a massive financial drain. Gartner estimates that poor data quality costs organizations an average of $12.9 million a year. A Forrester survey found that over 25% of organizations report annual losses of more than $5 million due to poor-quality data, and 7% report losses of more than $25 million. Despite these alarming numbers, many companies still rely on manual data quality checks, which are time-consuming, error-prone, and impossible to scale.

Think about it. Over 402.74 million terabytes of data are created every day, yet data engineers are still expected to sift through endless SQL queries, hunting for missing values and inconsistencies. Even if they manage to clean today's data, new records keep pouring in tomorrow, making it extremely hard to keep up. And with AI systems increasingly relying on this data to make decisions, bad data doesn't just cause errors; it scales them.

The solution? Hand off data quality monitoring to an automated tool. This not only helps maintain robust data quality in the era of big data but also brings significant financial benefits. In this blog, we will explore why organizations must transition from manual checks to automated systems, a move that can potentially save millions through avoided errors and improved operational efficiency.

Why Are Manual Processes Failing?

Manual data quality checks are rapidly becoming obsolete. These processes aren’t wrong; they just aren’t enough, especially given the volume, velocity, and variety of big data.

As businesses scale and data starts flowing in from multiple sources, maintaining accuracy manually becomes unmanageable. Errors inevitably slip through, leading to inaccurate forecasts and financial losses. And that’s precisely what happened to Samsung a few years ago.

In 2018, Samsung Securities, the stock trading arm of Samsung, suffered a massive financial blow due to a manual data entry error. The company accidentally distributed shares worth $105 billion to employees, roughly 30 times the company's total outstanding shares, leading to a significant loss in market value and regulatory action.

Here’s what happened: while processing dividend payments, an employee entered "shares" instead of "won." Instead of distributing 2.8 billion won (approximately USD 2.1 million), the company issued 2.8 billion shares, valued at a staggering USD 105 billion, to employees in a stock ownership plan. Imagine that!

By the time the company caught the error and attempted to prevent employees from selling off the ghost shares, it was already too late. Within just 37 minutes, 16 employees had sold off five million shares, costing the company $187 million.

As a result of this mistake:

- Samsung Securities’ stock dropped by 12%, wiping out nearly $300 million in market value

- The company faced strict regulatory actions, including a six-month ban on acquiring new clients

- Major customers raised concerns about the company’s data security and risk management

This is the reality of manual processes. They are slow and prone to human error. And that is why businesses need automation.

Challenges & Limitations with Manual Data Quality Checks

Manual data quality checks involve hand-writing SQL queries, maintaining dbt tests, and spot-checking datasets to identify errors. Although these checks get the job done, they fall short in several ways:

- Limited scalability: Manual processes cannot handle the vast datasets and rapid data growth that characterize modern data environments. As data volumes increase, manual data checks become increasingly time- and resource-intensive, making it challenging to maintain data quality at scale.

- High error rates: Human error is common in manual data management. Data engineers can make honest mistakes during data entry, inspection, or correction, leading to inconsistencies in data. These errors can have a significant impact on final decision-making.

- Time-consuming: Manual data quality checks are incredibly time-consuming, as data professionals have to spend countless hours manually inspecting and correcting errors. This diverts skilled staff from more strategic work and slows down data processing.

- Inability to detect real-time anomalies: Manual data quality checks are typically performed periodically. This makes it difficult for data professionals to detect and respond to real-time data discrepancies. By the time issues are identified, they may have already impacted business operations, leading to lost revenue.

- Compliance and governance risks: Data handling must comply with privacy regulations such as the CCPA and GDPR. Because regulatory requirements keep evolving, manual processes struggle to keep up, increasing the risk of penalties.
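For reference, a hand-written check of the kind described at the start of this section might look like the following Python sketch, which runs three SQL queries against an in-memory SQLite table. The `orders` table, its columns, and the sample rows are hypothetical and exist only for illustration.

```python
import sqlite3

# Hypothetical orders table, created in memory for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, customer_email TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "a@example.com", 25.0), (2, None, 40.0), (2, "b@example.com", -5.0)],
)

# Each manual rule is its own hand-written query.
missing_emails = conn.execute(
    "SELECT COUNT(*) FROM orders WHERE customer_email IS NULL"
).fetchone()[0]

duplicate_ids = conn.execute(
    "SELECT order_id FROM orders GROUP BY order_id HAVING COUNT(*) > 1"
).fetchall()

negative_amounts = conn.execute(
    "SELECT COUNT(*) FROM orders WHERE amount < 0"
).fetchone()[0]

print(missing_emails, duplicate_ids, negative_amounts)
```

Three rules on one table already take three separate queries, and each has to be revisited by hand whenever the schema or the rule changes.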

What Are Automated Data Quality Checks?

Automated data quality checks use tools, techniques, and systems to ensure that an enterprise's data is complete, accurate, consistent, and up-to-date. These checks can be scheduled to run automatically or triggered when new data arrives, flagging issues such as missing values, duplicates, format errors, and anomalies.

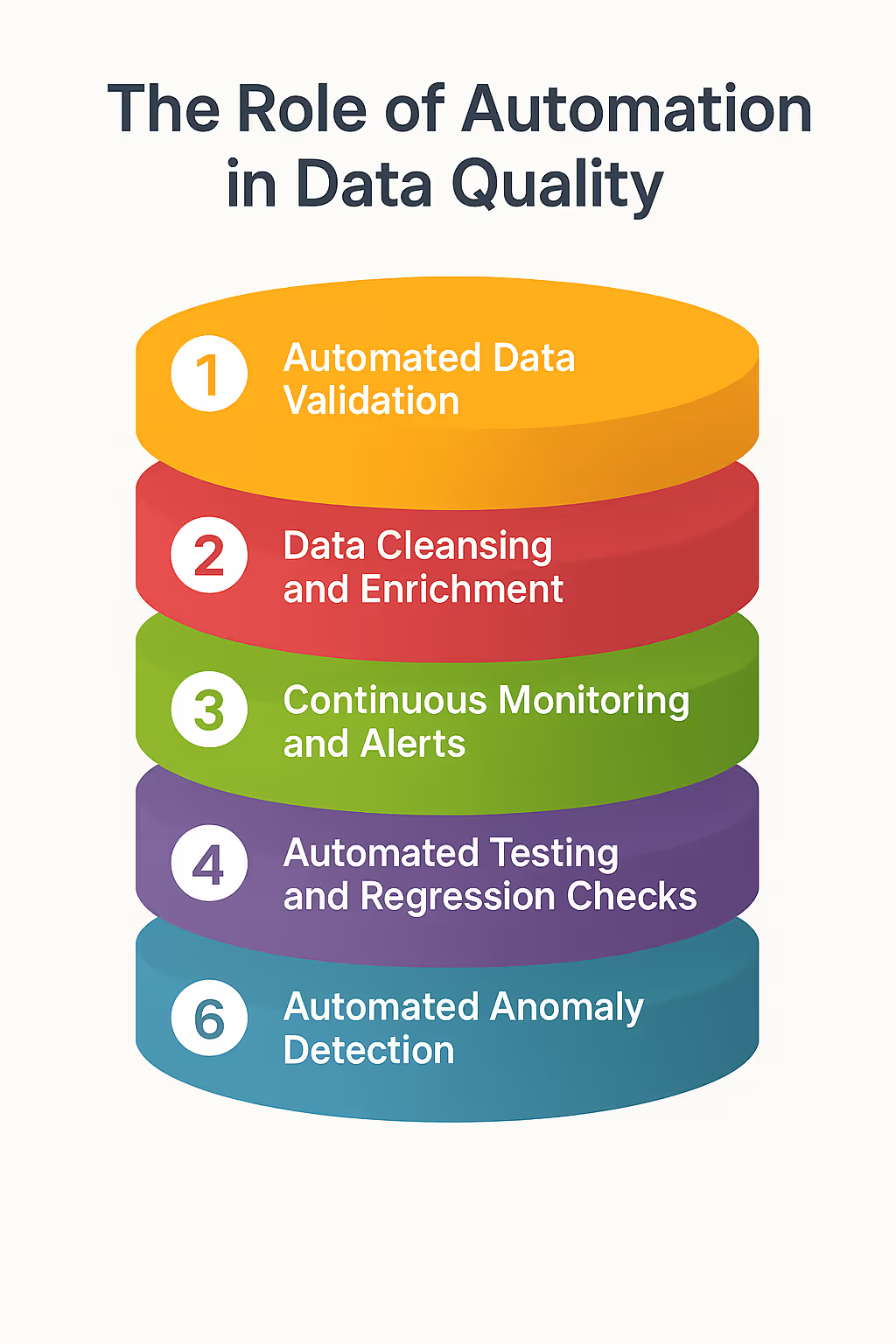
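As a minimal sketch of the "triggered when new data arrives" pattern, the function below runs the same checks on every incoming batch and returns the issues it finds, rather than waiting for someone to query the data. The record fields (`id`, `email`, `signup_date`), the deliberately simple email pattern, and the `on_new_batch` hook are hypothetical; a real tool would attach to your pipeline or warehouse.

```python
import re
from datetime import date

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately simple format check
REQUIRED = ("id", "email", "signup_date")

def check_batch(records):
    """Flag missing values, duplicate IDs, and badly formatted fields in one batch."""
    issues, seen_ids = [], set()
    for i, rec in enumerate(records):
        # Missing values: any required field that is absent or None.
        for field in REQUIRED:
            if rec.get(field) is None:
                issues.append((i, "missing", field))
        # Duplicates: an id already seen earlier in this batch.
        if rec.get("id") is not None:
            if rec["id"] in seen_ids:
                issues.append((i, "duplicate", "id"))
            seen_ids.add(rec["id"])
        # Format errors: email must match the pattern, signup_date must be an ISO date.
        email = rec.get("email")
        if email is not None and not EMAIL_RE.match(email):
            issues.append((i, "bad_format", "email"))
        signup = rec.get("signup_date")
        if signup is not None:
            try:
                date.fromisoformat(signup)
            except ValueError:
                issues.append((i, "bad_format", "signup_date"))
    return issues

def on_new_batch(records):
    """Hypothetical hook a pipeline would call whenever new data lands."""
    issues = check_batch(records)
    for row, kind, field in issues:
        print(f"row {row}: {kind} {field}")
    return issues

batch = [
    {"id": 1, "email": "ana@example.com", "signup_date": "2024-03-01"},
    {"id": 1, "email": "not-an-email", "signup_date": "2024-13-01"},
    {"id": 2, "email": None, "signup_date": "2024-03-02"},
]
issues = on_new_batch(batch)
```

Because the checks live in one function, every batch gets the same treatment no matter who is on call.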

Typically, automation of data quality checks includes the following processes:

- Data cleansing: Identifying and correcting (or removing) inaccurate records in a dataset or database.

- Data validation: Ensuring the gathered data is accurate, consistent, and relevant to the context.

- Data profiling: Examining the data in a database and generating informative summaries of it.

- Data de-duplication: Eliminating redundant records from the database.

- Data standardization: Ensuring the data is consistent and follows specific formatting rules.

- Data enrichment: Adding value to data by supplementing it with helpful information from external sources.
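The sketch below chains three of these processes (standardization, de-duplication, and a simple profile) over plain Python dictionaries. The `customers` records, the normalization rules, and the choice of email as the de-duplication key are assumptions for illustration; enrichment is omitted because it depends on an external data source.

```python
from collections import Counter

def standardize(rec):
    """Normalize formatting so equivalent values compare equal."""
    return {
        "name": " ".join(rec["name"].split()).title(),  # collapse whitespace, title case
        "email": rec["email"].strip().lower(),          # treat emails case-insensitively
        "country": rec["country"].strip().upper(),      # e.g. ' uk' -> 'UK'
    }

def deduplicate(records, key="email"):
    """Keep the first record for each key value and drop later repeats."""
    seen, unique = set(), []
    for rec in records:
        if rec[key] not in seen:
            seen.add(rec[key])
            unique.append(rec)
    return unique

def profile(records):
    """Summarize the dataset: row count and the distribution of the country column."""
    return {
        "rows": len(records),
        "country_counts": dict(Counter(r["country"] for r in records)),
    }

customers = [
    {"name": "  ada   lovelace", "email": "Ada@Example.com ", "country": "uk"},
    {"name": "Ada Lovelace", "email": "ada@example.com", "country": "UK"},
    {"name": "alan turing", "email": "alan@example.com", "country": " uk"},
]

clean = deduplicate([standardize(c) for c in customers])
summary = profile(clean)
print(summary)
```

Standardizing before de-duplicating matters here: the first two records only become recognizable duplicates once their emails are trimmed and lowercased.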

Automated data quality checks are a crucial aspect of data management. They are particularly relevant in today’s big data applications, where manual checking is often not a viable option.

Revefi is an excellent platform for automating your data quality checks and obtaining end-to-end data observability within a few minutes. Unlike traditional manual checks that rely on predefined rules, Revefi uses machine learning to proactively detect inconsistencies across vast datasets. This proactive approach ensures superior data quality and gives you peace of mind about the accuracy of your data.

Need for Automated Data Quality Monitoring

Despite advancements in data processing, many enterprises still depend on SQL-based rules and spreadsheets to monitor data quality. These manual processes aren’t just time-consuming; they are also unsustainable.

Let’s talk about a typical data quality workflow:

Business teams define rules in spreadsheets, which developers then translate into SQL queries. When business requirements change, these rules must be updated across multiple systems, often requiring hours or even days of development effort.

If a new data source is introduced, analysts must first assess the data and then coordinate with developers to ensure proper integration. This cycle repeats endlessly, making it nearly impossible to scale.

Imagine a company managing hundreds of data sources, each with its own attributes and quality standards. Maintaining all of these manually is a logistical nightmare.

Every schema change and rule modification adds complexity, increasing costs and straining IT resources. In some cases, deploying a single new rule can take an entire workday.

This is why enterprises need automation. Automated data quality tools minimize the need for manual coding and allow enterprises to adapt to changes quickly. Instead of relying on spreadsheets and SQL-heavy workflows, companies can use AI-driven tools that continuously monitor data, flag inconsistencies in real time, and maintain accuracy without constant developer intervention.
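One common way to avoid hand-translating every spreadsheet rule into SQL is to keep rules as data and let a single generic engine apply them. The sketch below assumes rules shaped like rows in a business team's spreadsheet (`column`, `check`, `arg`); the rule names and the `CHECKS` registry are hypothetical and do not reflect any specific product's format.

```python
# Generic check functions: each takes a cell value and an optional rule argument.
CHECKS = {
    "not_null": lambda value, arg: value is not None,
    "min": lambda value, arg: value is not None and value >= arg,
    "one_of": lambda value, arg: value in arg,
}

# Rules as data, e.g. exported from a spreadsheet the business team maintains.
RULES = [
    {"column": "customer_id", "check": "not_null", "arg": None},
    {"column": "amount", "check": "min", "arg": 0},
    {"column": "status", "check": "one_of", "arg": {"paid", "refunded", "pending"}},
]

def apply_rules(rows, rules):
    """Evaluate every rule against every row; return (row_index, column, check) failures."""
    failures = []
    for i, row in enumerate(rows):
        for rule in rules:
            passed = CHECKS[rule["check"]](row.get(rule["column"]), rule["arg"])
            if not passed:
                failures.append((i, rule["column"], rule["check"]))
    return failures

rows = [
    {"customer_id": 7, "amount": 19.99, "status": "paid"},
    {"customer_id": None, "amount": -3, "status": "shipped"},
]
failures = apply_rules(rows, RULES)
print(failures)
```

With this design, a changed business requirement becomes an edited row in `RULES`, for example appending `{"column": "currency", "check": "one_of", "arg": {"USD", "EUR"}}`, rather than a new query to write and deploy.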

Benefits of Automated Data Quality Checks

There are numerous benefits of automating data quality checks. Here are the most notable ones:

- Easier to set up: Automated checks require far less setup. There is no need to hand-write rules or maintain dbt tests, which are often prone to human error.

- Increased efficiency and cost savings: Data quality check automation frees up resources and reduces costs associated with manual efforts. Data professionals can thus focus on more strategic activities while automated tools handle monotonous data quality tasks.

- Improved data compliance: Regulations such as GDPR require personal data to be accurate and kept up to date. By automating your data quality checks, you can continuously verify that your data quality standards are being met.

- Highly scalable: Automated tools handle vast datasets with ease, making them ideal for enterprises that continue to grow in size and data complexity.

Key Data Quality Checks

Enterprises can implement a wide range of data quality checks to ensure data accuracy and consistency.

The most popular ones include:

- Completeness: This check verifies that all required data fields are populated with no values missing.

- Consistency: This confirms that all data is consistent across various data sources and systems.

- Validity: This check validates whether the data conforms to predefined rules, formats, and business requirements.

- Uniqueness: This identifies and eliminates duplicate records to ensure data integrity and avoid redundancy.

- Accuracy: This check ensures the data values correctly reflect the real-world entities and events they describe.

- Structural: This check ensures all the data is adequately organized and formatted based on the database schema.

- Timeliness: This monitors the freshness of data to ensure it is up-to-date and relevant for decision-making.
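Several of these checks reduce to simple metrics. The sketch below computes completeness, uniqueness, validity, structural conformance, timeliness, and cross-source consistency for a small in-memory table. The column names, the expected schema, the 24-hour freshness window, and the two "systems" being compared (a warehouse table and a CRM extract) are assumptions. Accuracy is left out because it needs a trusted reference source to compare against.

```python
from datetime import datetime, timedelta, timezone

EXPECTED_SCHEMA = {"id": int, "email": str, "updated_at": datetime}

def completeness(rows, column):
    """Share of rows where the column is populated."""
    return sum(r.get(column) is not None for r in rows) / len(rows)

def uniqueness(rows, column):
    """Share of distinct values among populated values (1.0 means no duplicates)."""
    values = [r[column] for r in rows if r.get(column) is not None]
    return len(set(values)) / len(values)

def validity(rows, column, predicate):
    """Share of populated values that satisfy a business rule."""
    values = [r[column] for r in rows if r.get(column) is not None]
    return sum(predicate(v) for v in values) / len(values)

def structural(rows, schema):
    """Indexes of rows whose keys or value types do not match the expected schema."""
    bad = []
    for i, r in enumerate(rows):
        wrong_type = any(r.get(k) is not None and not isinstance(r[k], t) for k, t in schema.items())
        if set(r) != set(schema) or wrong_type:
            bad.append(i)
    return bad

def is_fresh(rows, column, max_age, now):
    """Timeliness: the newest timestamp must be within max_age of now."""
    newest = max(r[column] for r in rows if r.get(column) is not None)
    return now - newest <= max_age

def inconsistent_ids(source_a, source_b, key, column):
    """Consistency: keys present in both sources whose values disagree."""
    b_values = {r[key]: r[column] for r in source_b}
    return sorted({r[key] for r in source_a
                   if r[key] in b_values and r[column] != b_values[r[key]]})

now = datetime(2024, 6, 1, 12, 0, tzinfo=timezone.utc)
warehouse = [
    {"id": 1, "email": "a@example.com", "updated_at": now - timedelta(hours=2)},
    {"id": 2, "email": None, "updated_at": now - timedelta(hours=30)},
    {"id": 2, "email": "b@example", "updated_at": now - timedelta(hours=5)},
]
crm = [{"id": 1, "email": "a@example.com"}, {"id": 2, "email": "b@example.com"}]

report = {
    "completeness_email": completeness(warehouse, "email"),
    "uniqueness_id": uniqueness(warehouse, "id"),
    "validity_email": validity(warehouse, "email", lambda e: "." in e.split("@")[-1]),
    "structural_bad_rows": structural(warehouse, EXPECTED_SCHEMA),
    "fresh": is_fresh(warehouse, "updated_at", timedelta(hours=24), now),
    "inconsistent_ids": inconsistent_ids(warehouse, crm, "id", "email"),
}
print(report)
```

Expressing each check as a ratio makes it easy to attach a threshold later, for instance alerting when completeness drops below a target you choose.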

How Do You Implement Automated Data Quality Checks?

Automated data quality checks are far easier to set up than manual ones. First determine what needs to be checked, then connect the right automation tool to your data. Let’s walk through the process in detail below:

Step 1: Outline Your Data Quality Objectives

To begin with, you must clearly define your data quality objectives, criteria, and metrics.

For instance, let’s say you are a medical facility that values data accuracy the most. You want to ensure that all your patient records are error-free. This means checking all records for duplicate entries, incorrect patient IDs, missing medical history data, etc.

Defining your objectives clearly at the outset will help you set up relevant rules and automation parameters.
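One way to make objectives actionable is to write each one down as a measurable metric with a target, so that later steps have something concrete to automate. The objective names, metric names, and thresholds below are hypothetical, loosely based on the patient-records example; choose targets that reflect your own risk tolerance.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Objective:
    name: str      # what you care about, in plain language
    metric: str    # how it is measured
    target: float  # minimum acceptable value, as a fraction of records

OBJECTIVES = [
    Objective("No duplicate patients", "share of unique patient IDs", 1.0),
    Objective("Valid patient IDs", "share of IDs matching the ID format", 0.999),
    Objective("Complete medical history", "share of records with history populated", 0.98),
]

def unmet(objectives, measured):
    """Names of objectives whose measured value is below target (unmeasured counts as 0.0)."""
    return [o.name for o in objectives if measured.get(o.metric, 0.0) < o.target]

# Hypothetical measurements from the latest run of your checks.
measured = {
    "share of unique patient IDs": 1.0,
    "share of IDs matching the ID format": 0.995,
    "share of records with history populated": 0.99,
}
print(unmet(OBJECTIVES, measured))
```

Treating an unmeasured metric as 0.0 is a deliberate choice here: an objective nobody is measuring shows up as unmet instead of silently passing.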

Step 2: Choose the Right Data Quality Automation Tool

Not all automation tools are created equal. Only some integrate easily with your existing data infrastructure and offer advanced data quality monitoring capabilities.

To make sure you choose the right tool, look for the following:

- Integration capabilities: Can it connect with your data warehouses, datasets, or cloud storage?

- Data cleansing capabilities: Does it support cleansing tasks such as validation, standardization, and enrichment?

- Scalability: Can it handle growing volumes of data?

- Real-time monitoring: Does it catch errors in real time?

- AI-driven anomaly detection: Does it offer ML-based insights or only rule-based validation to ensure data quality?

Choosing the right automation tool may appear challenging, but investing time in researching and picking the best tool for the job is worthwhile. Revefi is an exceptional data quality check automation tool that can help you get started without any heavy coding requirements. With automated data observability and continuous performance optimization, it can maximize the ROI of your data.

Step 3: Automatically Establish Data Quality Rules

Next, set up data quality rules that align with your defined objectives, such as accuracy, completeness, or uniqueness, and enforce them automatically.

For instance, say an e-commerce company wants to prioritize the completeness of data. They can implement automated checks to ensure that every record entering their data warehouse from the CRM includes essential fields like customer name, email address, contact number, and order details.

If any of these fields are missing, the system can trigger an alert or flag the record for review before it’s used in analytics or customer outreach.
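The completeness rule from this example could be sketched as a gate that splits incoming CRM records into those ready for analytics and those flagged for review. The field names mirror the example above; the `quarantine` list and the decision to treat blank strings as missing are assumptions.

```python
REQUIRED_FIELDS = ("customer_name", "email", "contact_number", "order_details")

def missing_fields(record):
    """Required fields that are absent, None, or blank strings."""
    return [
        f for f in REQUIRED_FIELDS
        if record.get(f) is None or (isinstance(record[f], str) and not record[f].strip())
    ]

def gate(records):
    """Split records into (accepted, quarantined); quarantined entries list their gaps."""
    accepted, quarantine = [], []
    for rec in records:
        gaps = missing_fields(rec)
        if gaps:
            quarantine.append({"record": rec, "missing": gaps})
        else:
            accepted.append(rec)
    return accepted, quarantine

incoming = [
    {"customer_name": "Priya", "email": "priya@example.com",
     "contact_number": "+1-555-0100", "order_details": "SKU-42 x1"},
    {"customer_name": "Sam", "email": " ", "order_details": "SKU-7 x2"},
]
accepted, quarantine = gate(incoming)
for item in quarantine:
    print("flag for review:", item["missing"])
```

Keeping the flagged record together with the list of what is missing gives reviewers enough context to fix it without re-running the check.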

Step 4: Set Up Real-Time Alerts and Reporting

Once your automated checks are in place, configure the tool to notify the right teams when an issue arises. This helps catch problems well before they impact critical business decisions.

Consider implementing role-based notifications so relevant teams can receive alerts based on issue type. Make sure these alerts are sent through a channel that your team uses. For instance, Revefi sends real-time alerts to the relevant teams on Slack.

Additionally, dashboards can be used to visualize data quality trends and quickly spot recurring issues.
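Role-based routing can be as simple as a lookup from issue type to the owning team's channel. The sketch below only groups and formats the messages; the issue types, team channels, and fallback channel are hypothetical. Actual delivery is left out: with Slack, for instance, it would typically be an HTTP POST of the message text to an incoming-webhook URL.

```python
from collections import defaultdict

# Hypothetical mapping from issue type to the channel of the team that owns it.
ROUTES = {
    "freshness": "#data-eng-oncall",
    "schema_change": "#data-platform",
    "completeness": "#analytics",
}
DEFAULT_CHANNEL = "#data-quality"  # catch-all for issue types without an owner yet

def route_alerts(issues):
    """Group issues by destination channel and format one message per channel."""
    by_channel = defaultdict(list)
    for issue in issues:
        channel = ROUTES.get(issue["type"], DEFAULT_CHANNEL)
        by_channel[channel].append(f"[{issue['type']}] {issue['table']}: {issue['detail']}")
    return {channel: "\n".join(lines) for channel, lines in by_channel.items()}

issues = [
    {"type": "freshness", "table": "orders", "detail": "no new rows in 6h"},
    {"type": "completeness", "table": "customers", "detail": "email 12% null"},
    {"type": "duplicates", "table": "orders", "detail": "41 repeated order_id"},
]
messages = route_alerts(issues)
for channel, text in messages.items():
    print(channel, text, sep="\n")
```

The fallback channel ensures that a new kind of issue still reaches someone instead of being dropped because no route exists for it yet.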

Step 5: Monitor and Iterate

As datasets evolve, conduct periodic audits to refine rules and adapt to new data challenges. Analyze past errors to adjust data quality rules for improved accuracy.
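One simple way to let a rule adapt as data evolves is to derive its threshold from the metric's own recent history instead of hard-coding it. The sketch below flags a daily row count that falls more than three standard deviations from the historical mean. This z-score rule is a basic statistical stand-in chosen for illustration, not a description of Revefi's machine-learning models, and the row counts are hypothetical.

```python
from statistics import mean, stdev

def is_anomalous(history, today, z_threshold=3.0):
    """Flag today's value if it lies more than z_threshold std devs from the history mean."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today != mu  # flat history: any change at all is unusual
    return abs(today - mu) / sigma > z_threshold

# Hypothetical daily row counts for one table over the past two weeks.
daily_rows = [10_120, 9_980, 10_050, 10_210, 9_940, 10_080, 10_000,
              10_150, 9_900, 10_030, 10_110, 9_970, 10_060, 10_020]

print(is_anomalous(daily_rows, 10_090))  # a typical day
print(is_anomalous(daily_rows, 4_300))   # looks like a partial load
```

Recomputing the mean and standard deviation over a rolling window each day lets the threshold move with the data, which is the kind of iteration this step calls for.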

The Revefi platform incorporates machine learning to detect new patterns of data inconsistencies. Click here to learn more.

How Does Revefi Help Solve the Data Quality Challenge?

Revefi is an AI-powered platform that helps enterprises meet modern data management challenges. It lets you maximize the ROI of your data with effective identification and resolution of data issues.

With automated real-time monitoring, Revefi detects anomalies instantly and sends immediate alerts, enabling teams to address issues before they escalate into costly errors.

Additionally, Revefi integrates with various data warehouses, lakes, and analytics tools, ensuring easy monitoring without disrupting existing workflows. For enterprises dealing with high-volume, fast-moving data, it offers a cost-effective and reliable solution to maintain accuracy and compliance. Plus, its intuitive design lets you get started in just five minutes.

Conclusion

In the world of big data, manual data quality checks are no longer sufficient. Enterprises must embrace AI-driven automation to manage data quality effectively at scale.

Automated data quality check tools can help businesses ensure accurate, consistent, and meaningful data for effective decision-making. These tools reduce human error and streamline workflows, making them essential for modern businesses. So if you haven’t invested in one yet, now is the time!

Revefi’s AI-powered Agent automates data observability and quality management, delivering 10x improvements in efficiency.

Click here to get started.