I recently had the pleasure of joining an expert panel hosted by Data Science Connect to discuss one of the most pressing challenges in our industry: scaling AI across the enterprise.

It was a fantastic conversation, moderated by Brian Mink, with fellow panelists representing data management solution providers.

The core of our discussion revolved around one question: how do you scale AI in the enterprise from pilots to production?

At Revefi, I have experienced how companies are making the leap from manual, siloed processes to a unified, self-driving approach while realizing significant cost savings and massive improvements in operational efficiency. So, I got to share my perspective on how organizations can navigate this complex journey during the event!

The Common Friction Points of Scaling AI


Scaling AI is far more complex than building the simple prototypes discussed on LinkedIn and other social platforms. Someone can build a slick-looking, narrow-scope AI app in 30 minutes using Lovable or Replit, and while that’s impressive, 99.9% of those projects are not enterprise-ready and simply do not scale. The difference is like trying to turn a paper airplane into a passenger jet. It's a fundamentally different engineering and organizational challenge.

So, what are the common friction points? Here are a few data-centric challenges where most initiatives falter.

- Picking the Wrong Use Case: The much-discussed MIT study found that 95% of GenAI projects fail, highlighting a key issue. Many projects try to "consumerize" AI for the enterprise without understanding that enterprise workflows are unique and have been built over decades. A chatbot that works well as a consumer research tool won't necessarily fit into a complex, established business process.

- You must start with the right use case and ensure integration with existing enterprise workflows.

- It's All About the Data: This is the major hurdle. It is more than having the right data; it's about having the right context. Is the data quality sufficient? Are you actively observing it and acting on those observations? Do you have the right FinOps management of your data?

- Are the right controls in place for cost and performance?

- Do you have FinOps controls in place for data and AI? Are you monitoring the performance of AI systems?

From Pilot to Production: The Keys to Sustainable Success

We then discussed how organizations can evolve from one-off AI pilots to achieving large-scale AI success.

A proof-of-concept (POC) often involves a simple, basic workflow. A full production environment, however, demands repeatable, scalable, and highly automated workflows. While having a robust data foundation is critical, the landscape has become incredibly convoluted, with many companies trying a "do-it-yourself" approach. The challenge is that foundational models are evolving at a breakneck pace.

For instance, when GPT-5 was released, Revefi immediately began supporting it alongside older versions. Interestingly, we found ourselves recommending that some customers use GPT-4.1 or other versions in certain cases because the newer model wasn't performing as well as expected. This highlights a crucial point: to succeed in production, you need deep visibility and tracking. Are you monitoring performance? How many tokens are being used? This is where LLM Observability and Operations become essential.
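To make the visibility and tracking point concrete, here is a minimal sketch of per-model usage tracking. It assumes you already log each LLM call with its token counts, latency, and a pass/fail result from your own quality check. The `LLMCall` record, the `summarize` helper, and the model labels are hypothetical names used for illustration, not part of any vendor's API.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class LLMCall:
    """One logged LLM request (hypothetical record shape)."""
    model: str              # model label, e.g. "model-a" (placeholder)
    prompt_tokens: int
    completion_tokens: int
    latency_s: float
    success: bool           # did the output pass your own quality check?


def summarize(calls):
    """Aggregate token usage, latency, and success rate per model."""
    by_model = defaultdict(list)
    for call in calls:
        by_model[call.model].append(call)

    summary = {}
    for model, group in by_model.items():
        summary[model] = {
            "calls": len(group),
            "total_tokens": sum(
                c.prompt_tokens + c.completion_tokens for c in group
            ),
            "avg_latency_s": mean(c.latency_s for c in group),
            "success_rate": sum(c.success for c in group) / len(group),
        }
    return summary


# Illustrative records only; real values would come from your call logs.
calls = [
    LLMCall("model-a", 120, 80, 1.0, True),
    LLMCall("model-a", 100, 100, 3.0, False),
    LLMCall("model-b", 90, 60, 0.5, True),
]
report = summarize(calls)
```

Comparing these per-model summaries side by side is one simple way to notice when a newer model underperforms an older one for a given workload.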

Success is no longer about picking one LLM; it’s about having a flexible strategy that combines both predictive and generative AI. This brings up the critical and often underestimated topics of governance, privacy, security, and compliance. The controls you check off in a narrow POC are vastly different from the comprehensive security and compliance required across an entire organization's workflows.

Ultimately, the organizations that succeed are those that adopt a systems or platform mindset. They think holistically about how all these pieces fit together.

Measuring What Matters: Defining KPIs for AI Initiatives

An excellent question came from an audience member, who asked about the KPIs and metrics used to prove that AI initiatives are delivering a real business impact.

Having business impact metrics is always the best starting point. I look at metrics in four key ways:

- Business Impact: Is AI making you more productive? That's good. But are you being efficient? You can be very productive while being wildly inefficient. The primary criterion should always be the tangible impact on the business.

- Adoption Metrics: Are people actually using the tools? Some CEOs are mandating the use of AI tools like Cursor, Windsurf, and Copilot, so adoption is a key indicator of value.

- Reliability and Performance: As we've discussed, LLMs can produce erroneous outputs or hallucinations. Tracking the reliability and performance of these models is non-negotiable.

- Governance Adherence: Is the initiative compliant with your internal and external governance frameworks?

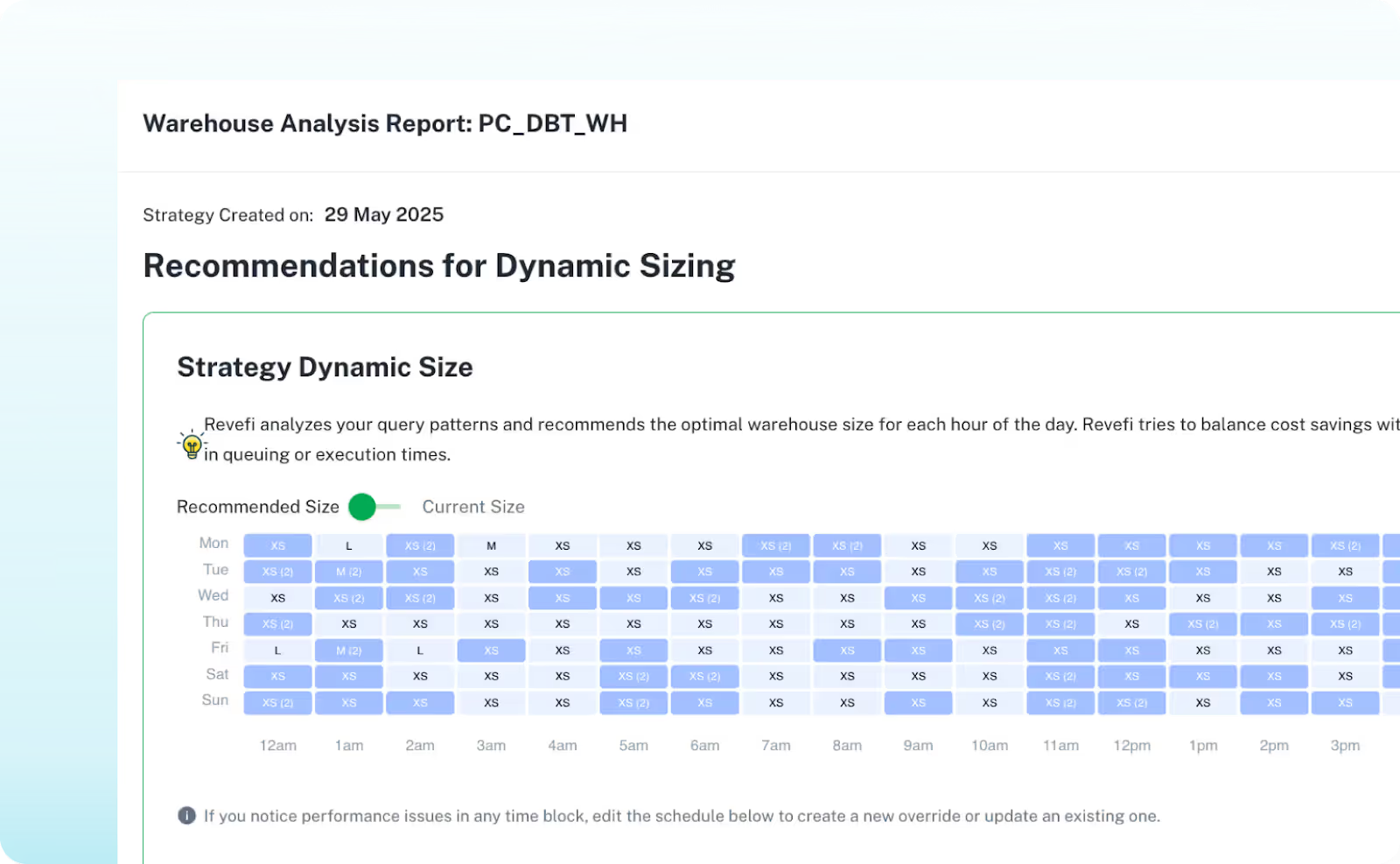

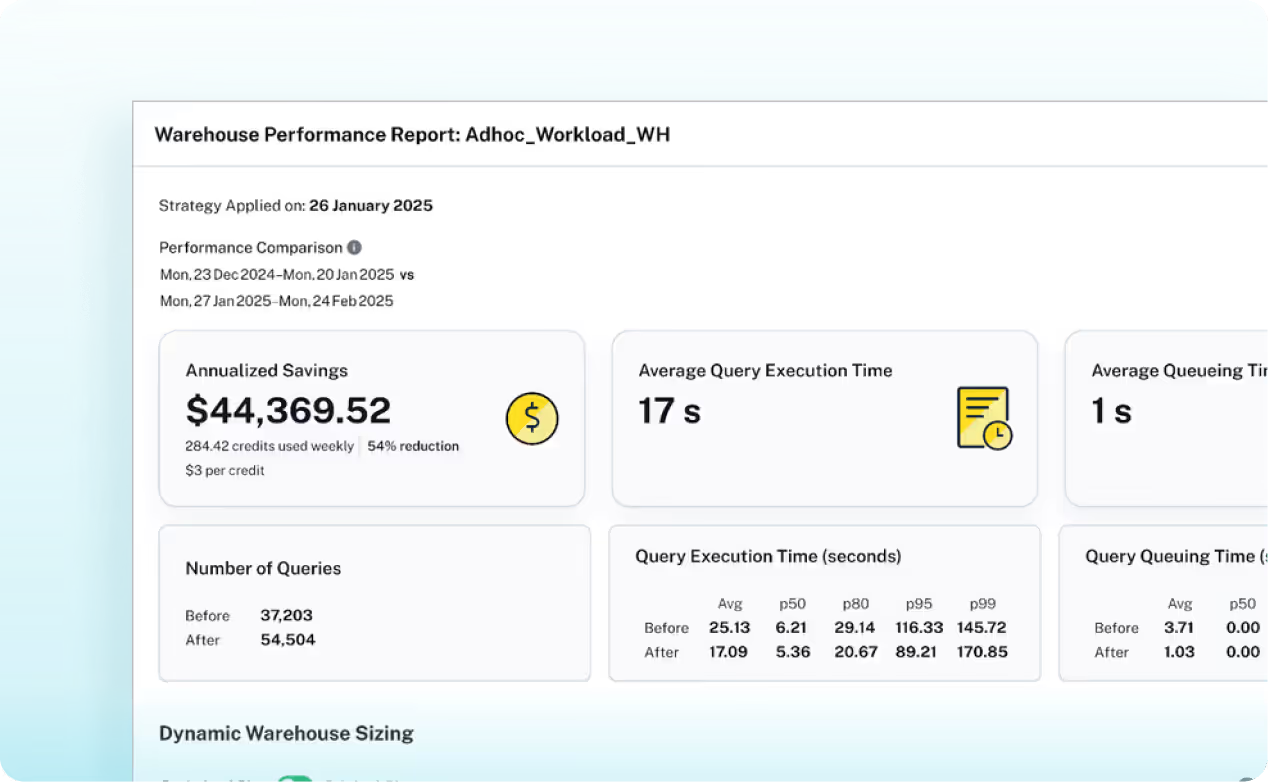

To make this concrete, at Revefi, our platform includes a feature for automated warehouse management. Within minutes, Revefi can baseline a customer's spend patterns on a platform like Snowflake, Databricks, or BigQuery and make specific recommendations to improve performance, quality, and cost.

The recommendations and changes include an ROI report showing the before-and-after, quantifying the business outcome in dollar amounts. This allows our customers to make a clear, data-driven case to management about the success of their AI adoption.
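The arithmetic behind a before-and-after comparison is simple, and a rough sketch may help. The `roi_summary` function and the dollar figures below are hypothetical placeholders for illustration; they are not Revefi's report format or actual customer results.

```python
def roi_summary(spend_before, spend_after):
    """Return absolute and percentage savings between two spend figures."""
    if spend_before <= 0:
        raise ValueError("spend_before must be positive")
    savings = spend_before - spend_after
    return {
        "savings_usd": savings,
        "savings_pct": round(100 * savings / spend_before, 1),
    }


# Placeholder monthly spend figures, in dollars.
summary = roi_summary(10_000.0, 7_500.0)
```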

The Revefi platform can also “auto-manage” the entire warehouse optimization process without any manual intervention!

Governance: From Bureaucracy to Enabler

Governance often sounds like a bureaucrat’s dream and an innovator’s nightmare. It gets a bad rap, perhaps deservedly so in the past, but I challenge that perception. When it comes to AI, I view governance as an enabling function.

My recommendation is a continuous model of governance that adapts as quickly as AI itself evolves. Instead of centralized “AI politburos” dictating rules from on high, I am in favor of decentralized ownership. Teams should own their governance processes, supported by lightweight, ongoing checks rather than static, manual gates.

Unifying Efforts: Predictive and Generative AI in Concert

Predictive and generative AI are not competing forces; they are complementary and can work together if architected properly. Let me illustrate this with an example from Revefi. We use predictive AI, i.e., machine learning and advanced statistical models, to analyze metadata from data platforms, baseline performance, and generate out-of-the-box insights on data quality, reliability, performance, and cost. We then use generative AI as the conversational interface for those predictive insights. Watch this informative video by my colleague Adam on how Revefi’s Generative AI Agent identifies underutilized Google BigQuery slots by leveraging the predictive AI insights.

At Revefi, we are not believers in "death by dashboards". Instead of having a Chief Data Officer file a Jira ticket to ask a question about a dashboard and wait two weeks for an answer, they can now use a GenAI interface to get results instantly from their existing data sets.

The reverse is also true. GenAI hallucinates. We can use predictive AI as a front-end to validate GenAI results and ensure they are accurate.

This complementary approach enables a powerful "human-in-the-loop" system where insights are generated, presented conversationally, and validated, all within a unified workflow. This is the reality of where the industry is heading.
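As a rough illustration of checking a GenAI answer against a statistical baseline, the sketch below flags a claimed number that falls far outside historical values. It uses a plain z-score test as a stand-in for a predictive model; the `is_plausible` function, the threshold, and the sample history are assumptions made for illustration, not Revefi's actual method.

```python
from statistics import mean, stdev


def is_plausible(claimed, history, max_z=3.0):
    """Return True if `claimed` lies within `max_z` standard deviations
    of the historical mean (a simple z-score check)."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs >= 2 points
    if sigma == 0:
        return claimed == mu
    return abs(claimed - mu) / sigma <= max_z


# Placeholder history of a metric, e.g. daily slot usage.
history = [100.0, 102.0, 98.0, 101.0, 99.0]

ok = is_plausible(101.0, history)        # close to the baseline
suspicious = is_plausible(150.0, history)  # far outside the baseline
```

A value that fails the check would be routed to a human reviewer rather than shown as fact, which is where the human-in-the-loop step fits.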

Final Piece of Advice ⚡

My one piece of advice from a data practitioner's perspective: start with high-priority outcomes that align with the business. Pick use cases that can be automated, and recognize that AI agents for data management and data cost optimization are not futuristic concepts. They are enterprise-proven, ready for primetime, and can deliver value today in both commercial and regulated environments.

You can watch the entire webinar here.

How Does the Revefi AI Agent Help Enterprises Scale Their AI Projects?

At its core, Revefi is an AI-native platform designed to help organizations maximize the return on investment (ROI) from their data estate. It addresses the critical challenges of escalating cloud data costs, inefficient performance, and unreliable data quality by unifying DataOps, FinOps, and Observability into a single, automated solution.

The Revefi platform includes an AI agent, RADEN, which works autonomously to monitor, analyze, and optimize data operations. Key capabilities include proactively identifying and eliminating cost inefficiencies, predicting future spending with high accuracy, identifying performance issues, and ensuring that data pipelines are both reliable and performant. Revefi's "zero-touch" setup allows for integration in under 5 minutes without requiring any code changes or agent installation, delivering rapid time to value.

Learn More

To learn more about how leading enterprises like AMD, Verisk, Stanley Black & Decker, Ingersoll-Rand, and Ocean Spray are using Revefi to maximize the ROI of their data stacks, request a free value assessment today.